Complete List

- [2026-02-19] Singularity Rootkit

- [2026-01-17] Digital Signatures on PDF

- [2026-01-09] 2025 - The End of Two OS

- [2026-01-08] New Year, New Desktop

- [2025-12-11] The Unintended Consequence of Replacing CRLF (\r\n) to LF (\n) on PNG Images

- [2025-11-23] Braille in Pokémon Sapphire and Ruby Game and Manga

- [2025-11-21] Firefox - MP4 Cannot be Decoded

- [2025-11-18] Anime and Manga Tech Gallery

- [2025-11-18] Fedora 43: Random Request to Access Macbook Pro Microphone

- [2025-11-14] Random Photos

- [2025-11-08] How Linux Executes Executable Scripts

- [2025-10-31] Hangul - Unicode Visualiser

- [2025-10-06] UTF-8 Explained Simply - The Best Video on UTF-8

- [2025-10-06] Render Archaic Hirigana

- [2025-10-03] Migrating Away Github: One Small Step To Migrate Away From US BigTech

- [2025-10-02] Binary Dump via GDB

- [2025-08-27] Gundam With Decent Portrayal of Code

- [2025-07-24] Incorrect Translation of a Math Problem in a Manga

- [2025-07-23] Rational Inequality - Consider if x is negative

- [2025-07-04] The Issue With Default in Switch Statements with Enums

- [2025-05-24] 2025 Update

- [2025-05-06] DuoLingo Dynamic Icons on Android

- [2025-04-14] Behavior of Square Roots When x is between 0 and 1

- [2025-04-08] 4 is less than 0 apparently according to US Trade Representative

- [2025-03-16] Row Major v.s Column Major: A look into Spatial Locality, TLB and Cache Misses

- [2025-03-07] The Bit Size of the Resulting Matrix

- [2025-03-07] Compiling and Running AARCH64 on x86-64 (amd64)

- [2025-02-23] top and Kernel Threads

- [2025-02-11] this: the implicit parameter in OOP

- [2025-01-28] Software Version Numbers are Weird

- [2025-01-23] view is just vim in disguise

- [2025-01-21] My Thoughts on the Future of Firefox

- [2025-01-15] The Sign of Char in ARM

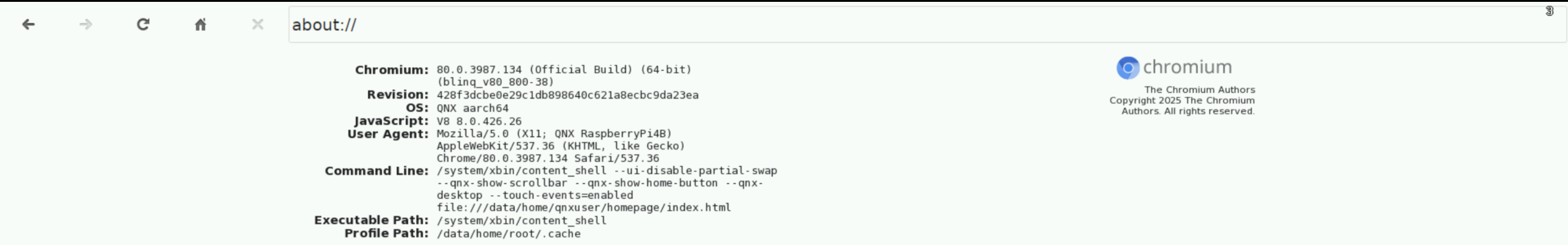

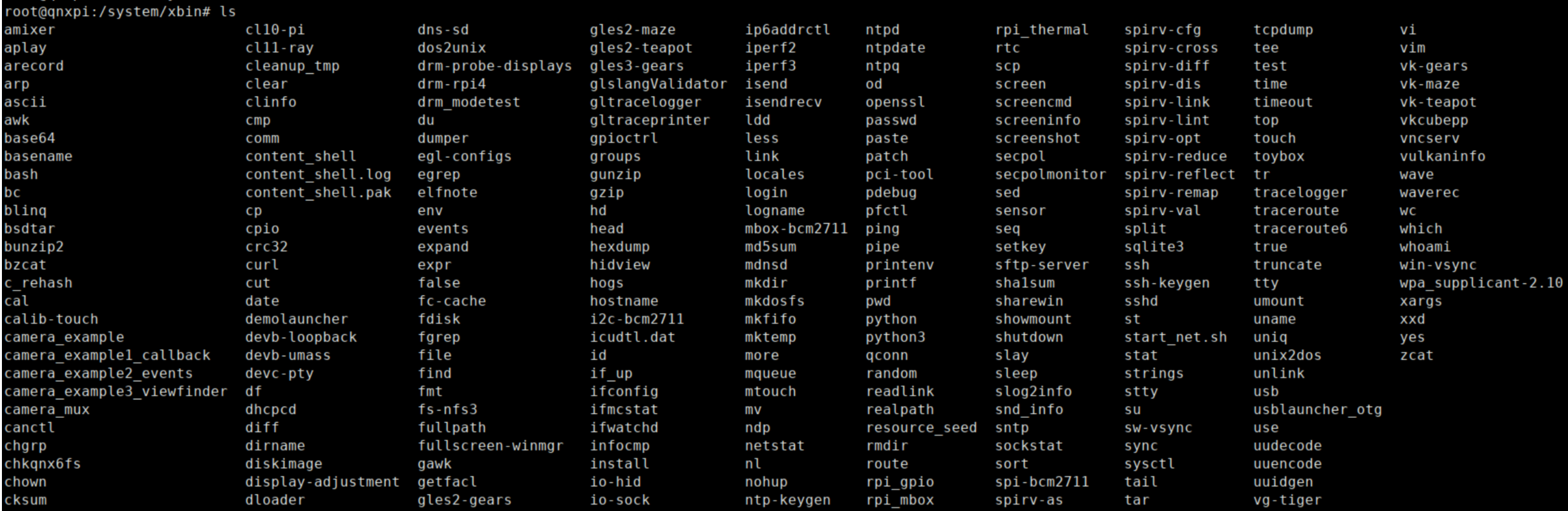

- [2025-01-04] A First Glance at Raspberry Pi 4 Running QNX 8.0

- [2024-12-29] New Laptop: Framework 16

- [2024-12-29] Utilizing Aliases and Interactive Mode to Force Users to Think Twice Before Deleting Files

- [2024-12-20] Stack Overflow: The Case of a Small Stack

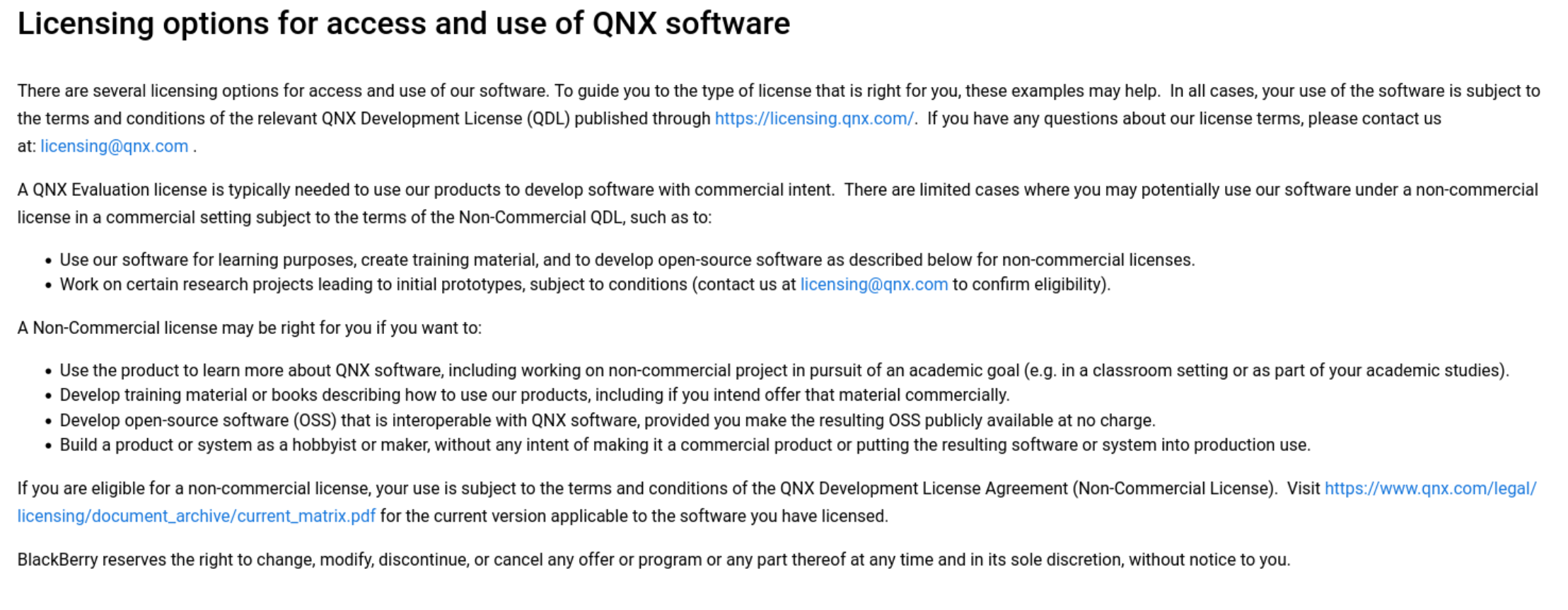

- [2024-11-09] QNX is 'Free' to Use

- [2024-10-08] [Preview] Manually Verifying an Email Signature

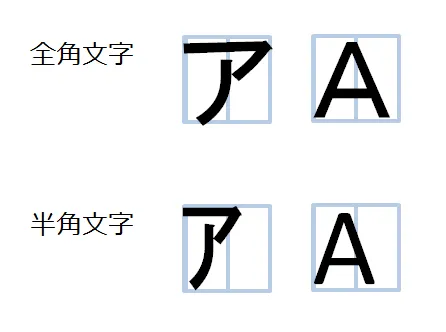

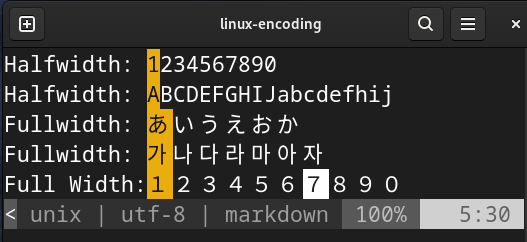

- [2024-10-06] [Preview] Half-Width and Full-Width Characters

- [2024-09-18] Mixing Number and String

- [2024-08-30] `.` At The End of a URL

- [2024-08-28] Splitting Pdfs into Even and Odd Pages

- [2024-08-28] Executing Script Loophole

- [2024-08-24] Replacing main()

- [2024-08-18] Editing GIFS and Creating 88x31 Buttons

- [2024-08-10] multiple definition of `variable` ... first defined here

- [2024-08-04] Delusional Dream of a OpenPower Framework Laptop

- [2024-08-04] 2024 Update

Singularity Rootkit

February 19, 2026

An interesting yet scary piece of software I read about on the weekly Linux news is Singularity, an open-source rootkit, which can hide itself from being detected, at least does a decent job at it. It hides its existence by removing itself from the list of active kernel modules, and also attempts to hide attacker-controlled processes, network communication, and related files. As it has kernel-level access, it can hook and intercept syscalls that could reveal files that singularity wishes to hide from.

Not totally related but this reminds me of a shared library exploit whereby the shared library intercepts the filesystem calls to readdir and getdents which is a neat trick. Though this is at a

userspace-level exploit and requires one to preload the malicious library (LD_PRELOAD).

The scary thing about this rootkit and any decent rootkits in general is its ability to hide itself. It is designed to hide itself thanks to it’s kernel-level privileges. It can intercept calls to various filesystem and network syscalls and APIs to conceal itself. This is why I am against the idea of allowing kernel-level anti-cheat code to be on my system. The idea of introducing a new level/ring in between userspace and kernel space or to introduce some new capabilities in userspace with controlled but limited and secured access to the kernel has been floated for years (though we do have eBPF which sort of functions like this). The 2024 Crowdstrike Incident for instance has compelled Microsoft to roll out a new security level to hopefully prevent this from ever occurring. Though it is not the issue of ensuring availability of the system that I am worried about, its the fact that we are placing trust to a non-open source third-party to have access to the kernel. Who knows what craziness they could do even if not intentionally (i.e. supply chain attacks).

Thankfully it would appear that this rootkit can be detected if observed at a third-party computer (i.e. examining the hard drive on another computer) or capture network traffic on a non-infected system. Fun fact, you can snoop harddrives without knowing their login as long as the drive is not encrypted. This was an eye-opener experience and made me think of my family desktops that we threw out over a decade ago.

On a weird note, Singularity also happens to be the name of an experimental microkernel OS by Microsoft that relied on software construction and memory safe language to ensure memory isolation and failure containment.

Digital Signatures on PDF

January 17, 2026

Recently, I came across my electronic copy of my degree and transcript from several years ago and remembered that it was digitally signed. I previously wrote about how to manually verify an email signature 2 years ago and that got me thinking: how can I verify the signature of my degree and transcript to ensure that it has indeed come from my University and that it has not been tampered with?

Note: When referring to digital signatures on PDFs, I am not referring to e-signature i.e. the graphical signature that contains a name for instance

For this post, I won’t go through the nitty-gritty details this time, and instead I will use various tools to do the work for me. In other words, this is not a technical post on digital signatures.

Digitally signing documents is a brilliant idea and I wished the France Éducation International did the same for their DELF/DALF exams. They used to email PDF results of the exam till the embassy and the organization later directed test centers to prioritize in-person collection:

We used to send result certificates by email in PDF format. However, the management center at the Embassy and France Éducation international

now recommend prioritizing in-person collection to reduce the risk of fraud, which has become increasingly common.

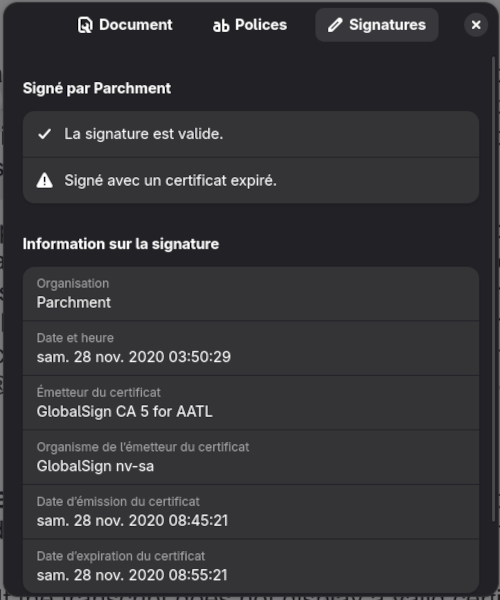

Here’s a sample of attached to my transcript:

Each page also contains a footer indicating that the document is digitall signed and encourages recipients to perform their due diligence to ensure that it has not been tampered with:

THIS TRANSCRIPT HAS BEEN DIGITALLY SIGNED AND CERTIFIED BY THE UNIVERSITY OF <REDACTED>. IT IS OFFICIAL

ONLY WHEN BEARING THE BLUE RIBBON SYMBOL, AND A VALID CERTIFICATE ISSUED BY GLOBALSIGN CA FOR ADOBE

While I do not own a Windows machine to verify this through Adobe Reader, I can confirm that the document indeed display a Blue Ribbon on my work laptop. For us Linux users, there are plenty of

options to verify the authenticity of the document itself such as through GNOME Document Reader or via command-line using pdfsig:

$ pdfsig etranscript.pdf

Digital Signature Info of: etranscript.pdf

Signature #1:

- Signature Field Name: ParchmentSig1

- Signer Certificate Common Name: Parchment

- Signer full Distinguished Name: CN=Parchment,O=Parchment,C=US

- Signing Time: Nov 28 2020 03:50:29

- Signing Hash Algorithm: SHA-256

- Signature Type: adbe.pkcs7.detached

- Signed Ranges: [0 - 185], [16571 - 699076]

- Not total document signed

- Signature Validation: Signature is Valid.

- Certificate Validation: Certificate has Expired

However, unlike GNOME Document Reader, pdfsig does not provide when the certificate expires and whichi certificate authority issued it.

To obtain ths information, we’ll first need to extract the signature from the PDF using the -dump option:

$ pdfsig -dump ./etranscript.pdf

Dumping Signatures: 1

Signature #0 (8192 bytes) => etranscript.pdf.sig0

We can then use openssl to extract the certificates from the signature:

$ openssl pkcs7 -inform DER -in ./etranscript.pdf.sig0 -print_certs -out etranscript.pem

$ openssl x509 -in ./etranscript.pem -text -noout

Certificate:

Data:

Version: 3 (0x2)

Serial Number:

01:c8:e8:dc:70:35:44:3b:ae:fd:f2:20:5a:ce:09:b6

Signature Algorithm: sha256WithRSAEncryption

Issuer: C=BE, O=GlobalSign nv-sa, CN=GlobalSign CA 5 for AATL

Validity

Not Before: Nov 28 08:45:21 2020 GMT

Not After : Nov 28 08:55:21 2020 GMT

Subject: C=US, O=Parchment, CN=Parchment

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

Side Note: One can observe that the certificate expired within 10 minutes of its creation and is likely to minimize potential risks.

Verify Hash of Document

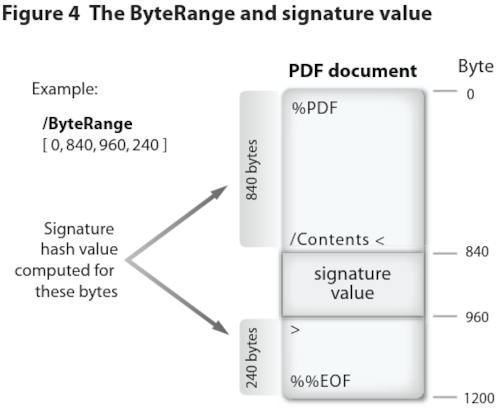

From what I can observe, it seems to be a common practice to embed the digital signature directly into the document itself rather than storing it in a separate file as shown below:

Extracted from Adobe - Digital Signatures in a PDF

During the signing process of a document, the software would typically hashes the document before using the private key of the signer to encrypt the resulting hash value. This signature is then typically provided to the receipient as a separate file. However, for PDFs that embed the signature, the process will differ as the software cannot simply just hash the document and then insert the signature arbitrarily as doing so will break the document.

Instead, the PDF will reserve a region within the document to fit the signature value and some related metadata. One such piece of metadata is the ByteRange entry to indicate exactly

what portions of the file is included in the signature as seen in the image above. For instance, ByteRange [0, 840, 960, 240] are simply pairs of offset in the file and its length:

- 0, 840:

- Offset: 0B

- Size: 840B

- 960, 240:

- Offset: 960B

- Size: 240B

In the case of my transcript, pdfsig indicated that the signed ranges of the document was: [0 - 185], [16571 - 699076].

To verify the signature indeed only verifies the indicated bytes, we can reconstruct the signed content by removing the embedded signature byte:

# Remove the digital signature from the document

dd if=./etranscript.pdf of=chunk1.bin bs=1 count=185 skip=0

dd if=etranscript.pdf of=chunk2.bin bs=1 count=682505 skip=16571

cat chunk1.bin chunk2.bin > reduced-transcript2.bin

We can now observe that approximately 37KiB of data corresponding to the unsigned content has been removed:

$ stat --printf="%s\n" etranscript.pdf

720645

$ stat --printf="%s\n" reduced-etranscript2.bin

682690

At this point, we should be able to verify the signature against the reconstructed document:

$ openssl cms -verify -binary -inform DER -in etranscript.pdf.sig0 -content reduced-etranscript2.bin -noverify | grep success

CMS Verification successful

This confirms that the signature has indeed only signed the specified bytes indicated by pdfsig. An important note, this does not

establish trust in the signer as I omitted the certificate check.

Based on my research and consulting with friends who have graduated, it does not seem my current institution will provide me a digitally signed degree when I graduate in 2027. This news is a bit disappointing

but unsurprising. Digital signed PDFs is still a piece of technology that is not widespread unlike HTTPS with their SSL/TLS certificates to cryptographically prove the identity of the webserver. With the

widespread impersonnation and fraud, I think it would be of the best interest for my institution to adopt digitally signing documents. They instead encourage employers to use AuraData which

provides risk prevention in the number one area of résumé fraud, post-secondary/professional designation education claims.

Any employer should do their due diligence and it would seem AuraData is a solution that works as evident from Case 989 where AuraData were mentioned in this tribunal case. In this case, a student fraudently stated they graduated from the university in 2015 and provided a copy of their degree which AuraData tried to verify. As this was supposedly issued in 2015, this was before Parchment Award existed and therefore probably before the university in question adopted this system. Therefore as the document was not digitally signed, AuraData did their due diligence and contacted the university to verify the student’s graduation which one can do on the university’s website for a fee of $22.50 CAD. But if the document had been digitally signed, AuraData would have been able to report to their client that the document is authentic. Of course, this is not foolproof if the student shares the same name as another graduate.

Interestingly enough, I have not been provided digitally signed letters of my employment status at the various companies I have worked at. I guess we got to make our security background check providers do something. On another note, it seems like Parchment, the provider of my digitally signed transcript and degree, is not a well liked platform. I got no complaints as I was given the two for free of charge upon graduation along with the physical copy of my degree and I never had to use their service to submit my documents to other institutions.

2025 - The End of Two OS

January 9, 2026

December 31st marked the end of

HP-UX. Apparently released in 1982, it was mainly used in

the enterprise world from what I know. I do not have much fond memories of this platform due to my

work compiling (building) security fixes (PSIRT - Product Security Incident Response) when I was at

IBM

many years ago, though no fault to HPUX itself. At IBM Db2, we called HPUX builds hpipf32 and hpipf64

as evident if you were to look at the link to any HPUX Db2 security patch: fixids=special_38387_DB2-hpipf64-universal_fixpack-9.7.0.11-FP011.

Though for some reason, I recalled calling it ia32 and ia64 as well for Intel Architecture 32 and 64 bits respectively. Though it’s been years

since I worked there so I probably am remembering specifics and other internal names incorrectly. Regardless, what was unique about HP-UX was

its adoption of Intanium processors, one of Intel’s 64-bit architecture (yes there’s other 64-bit architecture from Intel that exists but were

not released to the public). To learn more about Intanium, you can watch a video from Retrobytes on this.

It would seem that its death could have been related to the end of Intel’s production of the chip back in 2021.

Though it is not clear to me who pulled the plug but I assume it was Intel since they only had one major customer, HPE. Anyhow, according to a document from HPE,

HPE will still continue supporting HP-UX till December 31 2028:

Mature Software Product Support without Sustaining Engineering through at least 31-Dec-2028

I guess Intanium users will have to jump ship to Linux or replace their entire cluster with another architecture eventually. A former intern of mine cannot wait for IBM to drop support for Db2 Kepler and therefore Intanium but who knows when that will be.

Windows 10 also reached end of life on October 14, 2025 though one can continue getting support if they enroll in extended security support.

The death of two OS whose users will have to pivot to Linux if they cannot upgrade their hardware (unless you are one of those users with TPM 2.0 resisting the upgrade to Windows 11 reminiscent to how there are still XP and Windows 7 users in December 2025).

New Year, New Desktop

January 8, 2026

A new year, a new computer, and a new experience, building my first desktop. It’s only been a year since I got a Framework laptop which is already sufficent for my current needs so there wasn’t any good reason to get a new computer. While most think GPUs are only good for gaming and more recently for running your own local LLM, it can be used for more interesting things, compute such as numerical analysis and simulations. Not that I’m sufficiently motivated to do so myself. Perhaps I’ll write my final undergraduate Math paper on numerical analysis and simulations, but only time will tell.

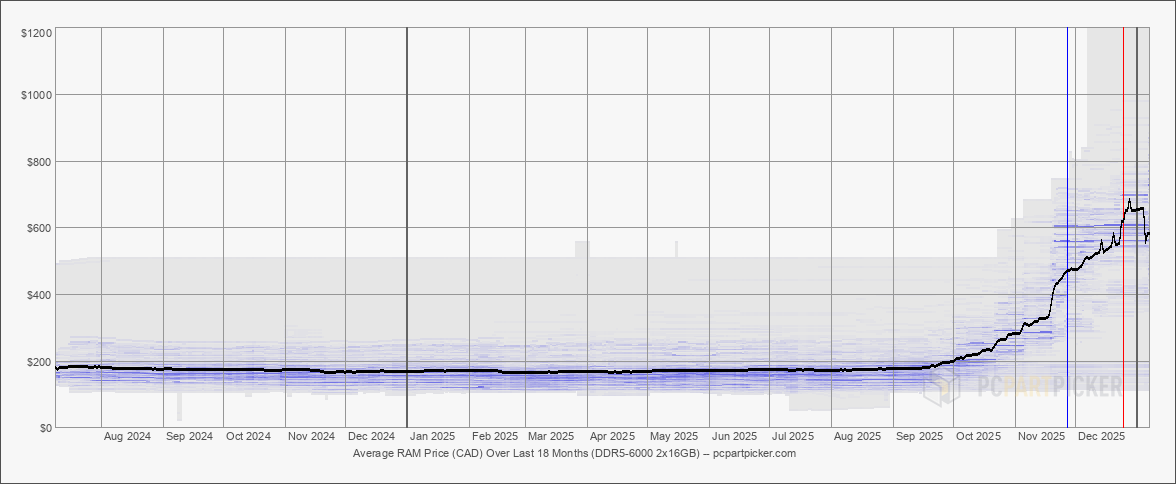

2026 is not the most ideal year to build a desktop, especially due to the rising RAM costs we have been experiencing since late September:

Source: PC Parts - Amount in Canadian Dollars

But alas, I already started buying PC components in late summer so I decided to take the hit and buy one of the few last batches (at least in 2025) of RAM that Crucial will ever provide as the parent company Micron decided to exit the market to focus on building HBM for the AI & Datacenter market. If you receive RAM from Crucial in February, you truly have the last few batches that’ll ever be manufactured.

Not including our 13% tax, the total came up to \$2346.54 CAD, an enormous sum for a student like myself. I did get receive an employee discount of \$134.92 CAD which did help but the amount was way less than what I have initially expected (i.e. $300 USD).

As this was my first build, I ended up falling for a lot of rookie mistakes which includes:

- Forgetting to peel off the sticker from the CPU cooler before placing it on top of the thermal paste

- Having my screwdriver shaft fall down inside a narrow part of the PC case which caused me needing to unscrew the fans built-into the case to remove it

- my screwdriver has a detachable shaft to make the driver shorter or taller

- Downloading the wrong bios: B650M RS PRO v.s. B650 RS PRO WIFI

- Plugging the display cable from the motherboard IO instead of the GPU IO

- Not plugging enough PCI-E cables between the GPU and Power Supply

- This explained why the GPU was not being detected originally and would have been missed if I didn’t try to perform some benchmarking

Specs and Performance

$ fastfetch

.',;::::;,'. zaku@fedora

.';:cccccccccccc:;,. -----------

.;cccccccccccccccccccccc;. OS: Fedora Linux 43 (Workstation Edition) x86_64

.:cccccccccccccccccccccccccc:. Host: B650M Pro RS WiFi

.;ccccccccccccc;.:dddl:.;ccccccc;. Kernel: Linux 6.17.12-300.fc43.x86_64

.:ccccccccccccc;OWMKOOXMWd;ccccccc:. Uptime: 2 hours, 2 mins

.:ccccccccccccc;KMMc;cc;xMMc;ccccccc:. Packages: 2517 (rpm)

,cccccccccccccc;MMM.;cc;;WW:;cccccccc, Shell: bash 5.3.0

:cccccccccccccc;MMM.;cccccccccccccccc: Display (BenQ GW2480): 1920x1080 @ 60 Hz in 24" [External]

:ccccccc;oxOOOo;MMM000k.;cccccccccccc: DE: GNOME 49.2

cccccc;0MMKxdd:;MMMkddc.;cccccccccccc; WM: Mutter (Wayland)

ccccc;XMO';cccc;MMM.;cccccccccccccccc' WM Theme: Adwaita

ccccc;MMo;ccccc;MMW.;ccccccccccccccc; Theme: Adwaita [GTK2/3/4]

ccccc;0MNc.ccc.xMMd;ccccccccccccccc; Icons: Adwaita [GTK2/3/4]

cccccc;dNMWXXXWM0:;cccccccccccccc:, Font: Adwaita Sans (11pt) [GTK2/3/4]

cccccccc;.:odl:.;cccccccccccccc:,. Cursor: Adwaita (24px)

ccccccccccccccccccccccccccccc:'. Terminal: Ptyxis 49.2

:ccccccccccccccccccccccc:;,.. Terminal Font: Adwaita Mono (11pt)

':cccccccccccccccc::;,. CPU: AMD Ryzen 7 9700X (16) @ 5.58 GHz

GPU: AMD Radeon RX 9070 XT [Discrete]

Memory: 3.67 GiB / 30.92 GiB (12%)

Swap: 0 B / 8.00 GiB (0%)

Disk (/): 17.12 GiB / 928.91 GiB (2%) - btrfs

Local IP (wlp6s0):

Locale: fr_FR.UTF-8

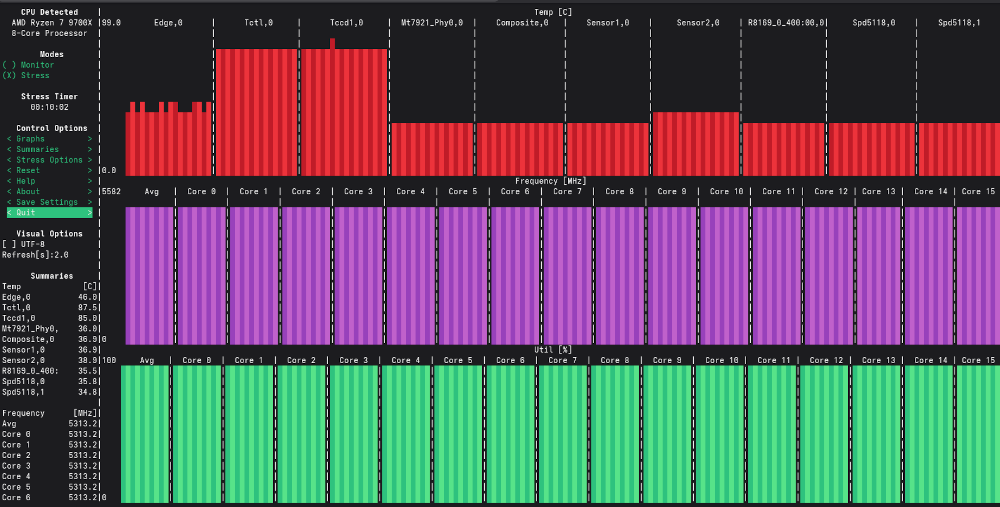

s-tui output - don't bother straining your eyes to read the values

The readings below are just a snapshot @ 11:37 ran on a different date from the image above:

- Tctl: 88.4

- Tccd: 86.1°C

- Core Frequency Avg: 5283.2MHz

Based on running s-tui CPU stress test for 10+ minutes, the system maintained temperatures below 90°C from what I have observed (though I haven’t kept an eye on it for all 10+ minutes) indicating the cooling and thermal paste appear to be working as intended. For Ryzen 7 9700X, the maximum operating temperature (Tjmax) is 95°C which my system is comfortably operating below even under sustained stress workloads. I am specifically tracking Tccd1 (the temperature associated with the physical Core Complex Die) rather than Tctl, as Tctl is a control value used for fan scaling or whatever cooling system used as stated in the K10temp Linux Kernel Driver:

Tctl is the processor temperature control value, used by the platform to

control cooling systems. Tctl is a non-physical temperature on an

arbitrary scale measured in degrees. It does _not_ represent an actual

physical temperature like die or case temperature. Instead, it specifies

the processor temperature relative to the point at which the system must

supply the maximum cooling for the processor's specified maximum case

temperature and maximum thermal power dissipation.

CCD is also another AMD terminology for Core Complex Die which you can read more about in this article or in this whitepaper. But essentially for Zen 5 architecture, you can think of a CCD as a collection of CPU cores with a shared L3 cache.

With an average clock rate of 5283.2MHz, the CPU was reaching close to its theoretical peak of 5.5GHz as expected. For context, the base clock speed is advertised to be 3.8GHz.

As the RAM was advertised to go 6000MHz (6000MT/s), I enabled EXPO (XMP) on the bios to utilise the full advertised speed instead of the default slower speed.

$ sudo dmidecode --type 17 | grep -E "Configured Memory Speed"

Configured Memory Speed: 6000 MT/s

Configured Memory Speed: 6000 MT/s

Here are the blender results:

| Device | Monster | Junkshop | Classroom |

| Radeon 9070xt (dGPU) | 1607.56 | 790.52 | 711.39 |

| Ryzen 7 9700X (CPU) | 173.95 | 118.89 | 87.12 |

A significant improvement compared to my Framework laptop:

| Device | Monster | Junkshop | Classroom |

| Radeon RX 7700S (dGPU) | 416.25 | 222.96 | 188.13 |

| Radeon 780M (iGPU) | 143.59 | 77.86 | 67.42 |

| Ryzen 9 7940HS (CPU) | 107.62 | 71.33 | 52.52 |

Why not X3D CPU?

The decision was mainly due to pricing, RAM prices doubled than what I allocated in my budget which I did slightly exceeded by a few Canadian dollars. An X3D chip would have costed an extra few hundred Canadian dollars and considering I do not play videogames, there was little reason to do so. With RAM prices doubling, an X3D chip would probably help alleviate the lack of memory in your system as it “hides” RAM latency due to its large L3 cache. But that is also another reason why I avoided the X3D chip which may sound counter-intuitive. With its abnormally large L3 cache for a desktop CPU, I felt that it would not be good for algorithm performance analysis as it reduces trips to the main memory which will diminish the effect of a poorly designed memory-bound algorithm.

The Unintended Consequence of Replacing CRLF (\r\n) to LF (\n) on PNG Images

December 11, 2025

Today there was a PR at work that tried to address the issue of engineers submitting code with DOS (Window) end of line (EOL) to the codebase.

Background (Skip if you are a Programmer)

\r and \n are what we call control characters,

non-printable characters that have effects to data and devices. For instance, \a will ring a bell, \n (line feed) will cause the cursor to go to the next line and \r (carriage return)

causes the cursor to return to the first character in the same line.

DOS (Microsoft’s text-based OS) uses \r\n to indicate an end of line (which we commonly refer to as CRLF) meanwhile, UNIX and its variants and sucessors use \n to indicate the end of file (commonly

refer to as LF or newline in layman terms). CRLF notation makes more sense from a historical point of view (i.e. teletype and typewriters) but it also takes unnecessarily an extra character.

Anyhow, long story short, I noticed that the PR and subsequent PRs relating to convert DOS to UNIX file format broke PNG since the first 8 Bytes of all PNG (the header, also called the magic number)

contains 0D 0A (CR LF):

89 50 4E 47 0D 0A 1A 0A

Use xxd or hexdump on any PNG file and you will discover this yourself..

$ convert -size 32x32 xc:white empty.png

$ xxd -l 8 empty.png | grep 0d0a

00000000: 8950 4e47 0d0a 1a0a .PNG....

Converting to UNIX style causes the PNG file to no longer be an image:

$ file empty.png

empty.png: PNG image data, 32 x 32, 1-bit grayscale, non-interlaced

$ dos2unix -f empty.png

dos2unix: conversion du fichier empty.png au format Unix…

$ file empty.png

empty.png: data

Braille in Pokémon Sapphire and Ruby Game and Manga

November 23, 2025

Pokémon Crystal was the first to introduce symbols or glyphs for players to decode according to my research. [1] Thankfully the Unknowns ressemble the English or the latin alphabet so the task to decode the ruins was feasible for children. However, in generation III, in the Hoenn region, decoding the ruins has gotten much more tricker with their use of Braille.

Braille is for the blind and the visual impaired and therefore would likely not be able to play the game. So the task of decoding the ruins would be a great challenge.

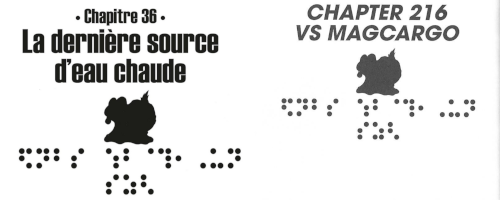

Reading the manga, Pokémon: La Grande Aventure - Rubis et Saphir, there’s a single black page with random white dots. Confused I did some research and found out it was braille.

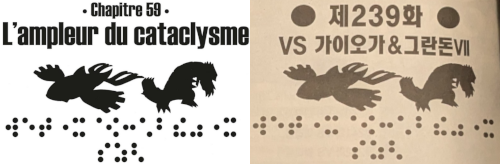

Random Braille at the end of chapter 21 (or 201)

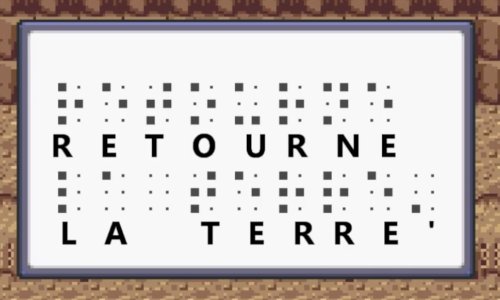

Since then, I noticed that each chapter would contain braille. This got me curious as to what it was. However, I had no success till I realized the braille was not localized and remained in Japanese braille. The game were localised though:

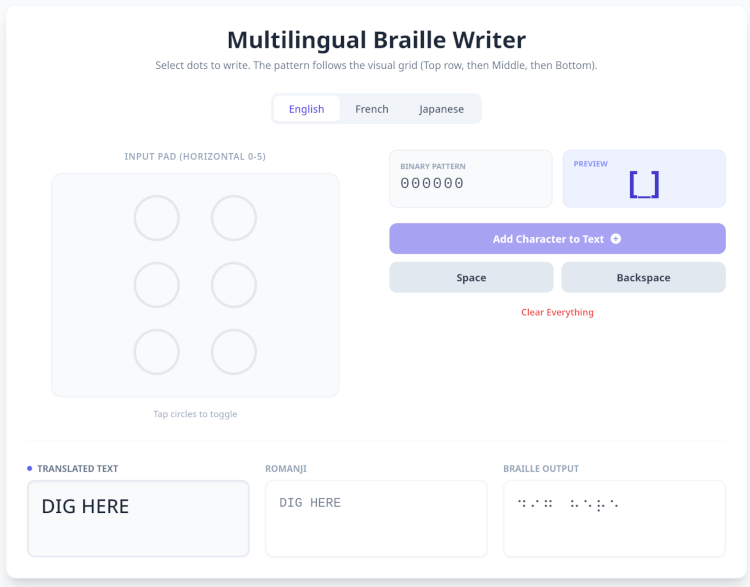

WARNING: I know the neocities community has a distaste for AI but it has been used to aid in the translation

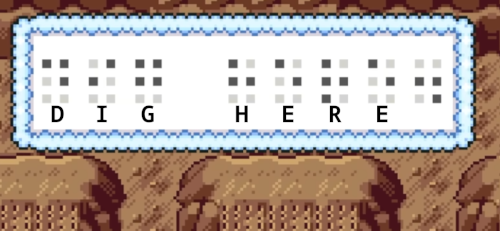

Through the power of vibe-coding on Gemini, I was able to create a primitive translator:

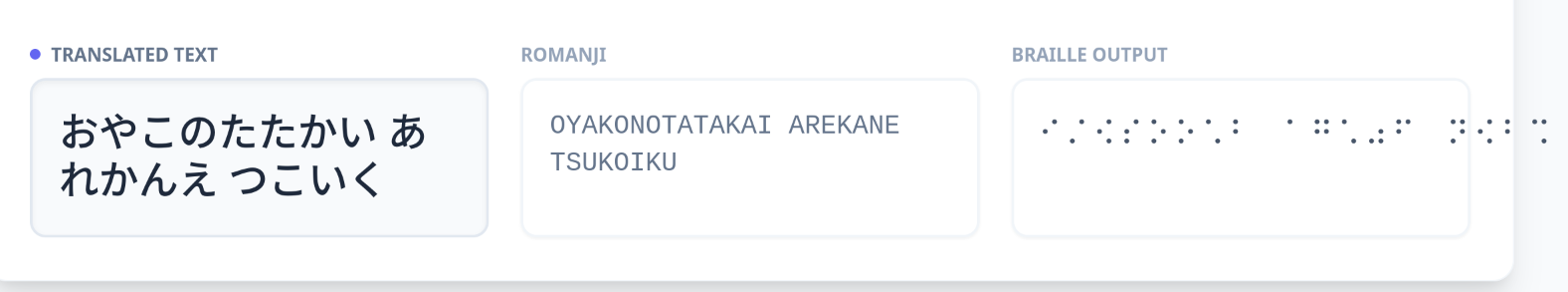

Screenshot from a vibe-coded braille translator

This tool comes in handy for Japanese as it’s a language I am unfamiliar with and hence why I needed this braille translator.

Japanese braille to Kana support

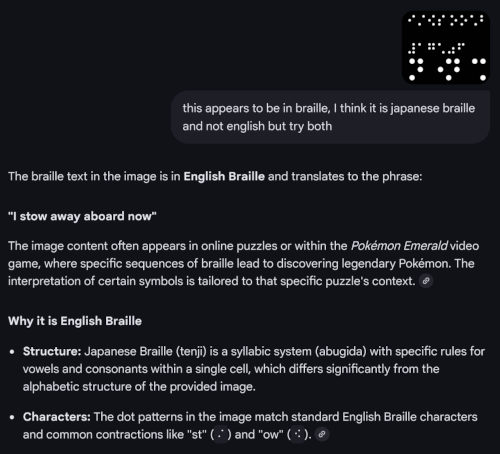

Failed Attempts to use AI to translate braille

AI (Gemini and ChatGPT) fails to translate Japanese braille into English. I provided both LLMs the braille in unicode and a screenshot of the braille and the results were disappointing. Gemini was convinced the braille was in English meanwhile Chatgpt faired better recognizing it was not possible for the braille to English. Furthermore, ChatGPT stated it could not infer the message from the unicode but when provided with an image of the braille, it incorrectly translate the message to `ガラスのつばさ` or `Wings of Glass`. As you may notice, the word appears to be shorter than what it should be, that i is because ChatGPT deleted them by justifying they weren't standard Japanese words.

The resulting kana that was translatable on the vibe-coded translator was: おやこのたたかい あれかんえ つこいく

I did skip one braille pattern during the translation as the vibe-coded translator did not recognise what it was so it’s not a direct translation but it should be good enough for my use case.

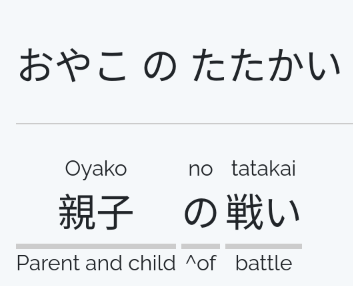

The English translation is Parent and child battles. One important note about this page, this page appears at the end of volume 16 which I did not realize since the French translation is in an omnibus (i.e. multiple volumes are packed into one large collection).

According to Bulbpedia is titled 親子の戦い or in English Battle Between Father and Son.

Translation provided by j-talk

In each chapter, there is a corresponding braille which Bulbapedia conveniently provides.

It was only after I gave up trying to decode and translate the braille, that I realized there was no relationship between the titles in both English (ChunYi) and French editions.

In a few weeks, I will be visiting my family so I should be able to determine if this is the case with the Korean edition as well since I do have a copy of the manga.

In each chapter, there is a corresponding braille which Bulbapedia conveniently provides.

It was only after I gave up trying to decode and translate the braille, that I realized there was no relationship between the titles in both English (ChunYi) and French editions.

In a few weeks, I will be visiting my family so I should be able to determine if this is the case with the Korean edition as well since I do have a copy of the manga.

Note: Some editions of the English translations apparently has better subtitles that better reflects the braille:

It is subtitled Restarting (Japanese: 決意の再出発 Deciding to Restart) in the VIZ Media translation and Setting Off Again in the Chuang Yi translation.

Description from bulbapedia

The vibe-coded braille to kana translator outputted: けつい の さい す゛ はつ のまき which when plugging into Chatgpt gives the following after prompting it to translate it into

English that is natural sounding:

-

“The Chapter of Determination” (most natural, common in manga)

決意の巻(けついのまき)

-

“The Beginning of Determination”

決意の初(はつ)めの巻

-

“The Birth of Determination”

決意の発(はつ)章

-

“The Size of Determination” (literal but natural)

If you actually want “size” (サイズ):

決意のサイズの巻

-

“Determination: First Chapter”

決意・第一章(だいいっしょう)

The actual answer is the following:

or officially:

- VIZ:

Mixing It Up with Magcargo - Restarting - ChuangYi:

VS Magcargo - Setting Off Again - Original:

VS マグカルゴ - 決意の再出発

Conclusion

The mysterious braille that the author dedicated an entire page to was simply Parent and child battles. Each chapter subtitle (if it exists in your edition) has a corresponding braille counterpart.

The braille in the English and French translation has not been localised.

Update: 2026-01-01

Chapter 239 title page in French and Korean

As I suspected, the Korean translation did not bother localizing the braille.

As a bonus, here are some questionable translations I found thus far: examples of mixed speech:

There are more in Chapter 233 but I figured there could be some cultural context I am missing as an anglophone so I shall refrain from stating it’s a mistranslation.

At the time of writing, I thought it was a potential typo or there was some cultural aspect I was missing to not understand why there were a lot of English words being mixed into Wallace (Marc)’s speech. However upon starting Pokémon Diamond and Perl, another coordinator who happens to be also a gym leader employs a heavy usage of English in her speech. It was so frequent that Diamond even makes a comment on it:

Diamant: J’ai bien peur qu’elle ne réussisse pas à relever le défi, en plus on comprend à moitié ce qu’elle dit

Perle: Oh, tu ne comprends pas l’english?

Chapter 231 (Chapter 34)

It appears that Kiméra is well known for this speech pattern where she employs a mix of different European languages in the Japanese version but I cannot confirm this.

Update (2026-02-22): Explanation why Wallace/Marc uses a lot of English words in his speech

[1] Based on bulbapedia and from my personal recollections, there were no messages to decode in the original Gold and Silver editions

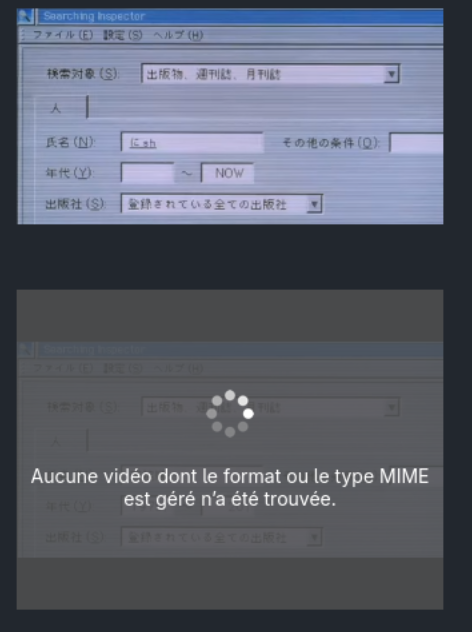

Firefox - MP4 Cannot be Decoded

November 21, 2025

One constant issue I have with Firefox is the instability of playing videos outside of Youtube. Often when watching anime online, I would often encounter issues where skipping even a second would cause the video to stop playing. Hence why I have mention in my FAQ that I use Brave, a privacy-focused chromium browser, to stream anime.

While making my gallery featuring retro tech on neocities, I encountered a similar issue. Firefox was unable to loop a MP4 video I uploaded. Though Brave unsurprisingly could.

The simple solution to the problem was to convert the MP4 video into webm, an open and royalty-free media format which is the image you see on the top meanwhile the video on the bottom was encoded in HEVC (H.265) via ffmpeg (not ffmpeg-free).

I was under the impression that Firefox supported HEVC but it turns out there are only limited support.

Based on the Error displayed on the video and Firefox complaining decoding error on the Web conole, I knew Firefox was missing some type of decoder to play my MP4 file.

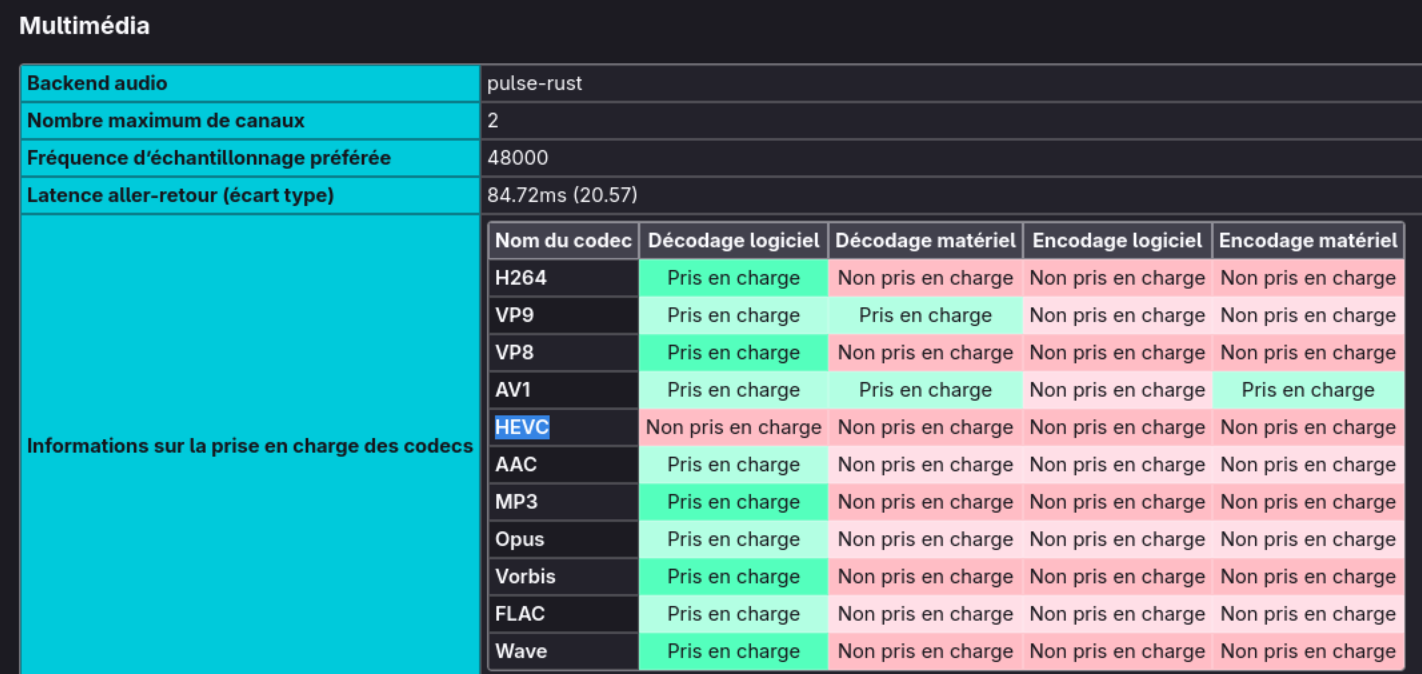

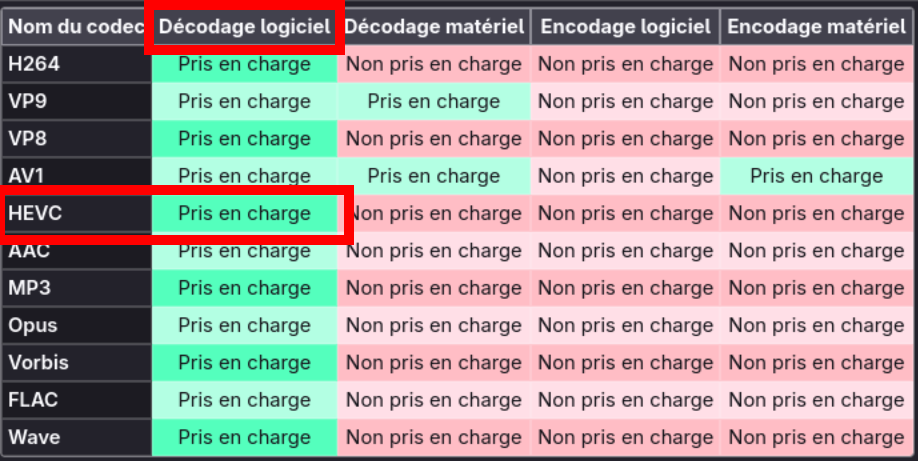

Placing about:support#media on the Firefox address bar reveals everything I needed to know:

Despite having HEVC en/de-coders on my Linux system, I needed to enabled them via Firefox configuration (about:config):

media.hevc.enableddom.media.webcodecs.h265.enabled

However, I opted to convert my MP4 file into webm to open access to all visitors.

HEVC is a patented code which based on Wikipedia only waives royalties on software-only implementations (and cannot be bundled with hardware).

Note: “Prise en charge” is “Supported” and “Décodage logiciel” is “Software Decoder” in English

UPDATE: The day I published this blog, I woke up to see an article about HVEC popping in my hackernews feed: HP and Dell disable HEVC support built into their laptops’ CPUs . Originally I was under the impression that CPU manufacturers such as AMD and Intel would be responsible to pay those fees but according to Ars Technica, it is not known if they indeed do. Tom’s Hardware reveals that chipmakers (at least in the GPU side) do have to pay a license fee to implement the feature in silicon. But it also reveals that to enable hardware decoding on the device leve, OEMs must also pay the fee. Therefore it would seem that HP and DELL will be disabling this capability on the software side (either on the driver or fireware level) if this logic applies for CPU as well. Considering the volume of CPUs DELL and HP purchase from AMD and Intel, I do think it could be possible for them to also request to fuse the capability off in silicon (though unlikely). As Tom’s Hardware notes, this is typically not done on the GPU so if we assume the same logic applies to CPUs, it is likely disabled on the software side.

Note: Not a support on Neocities so the video is hosted on codeberg

Anime and Manga Tech Gallery

November 18, 2025

For years, I always wanted to create a webpage with a collection of images from manga and anime that featured code or computers. I did amass a large collection but loss many of them over the years and the ones I do still have, I no longer remember where it is from.

You can view the galllery under /galllery

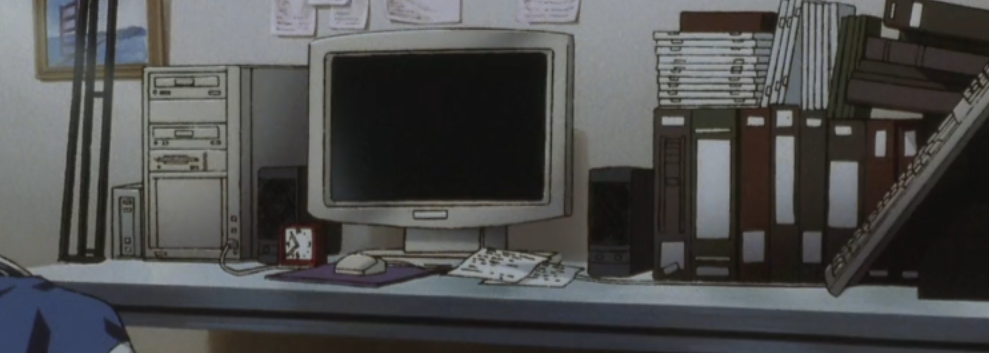

Watching Patlabor Movie 3 (ya I know it’s not good), I decided to start a new collection from scratch. Here are some notable snippets:

The movie was released in 2002, around the year my family got our first set of desktops that I grew up with. As typical for this time period, the desktop features a CD drive (not DVD) and a floppy disk drive.

From a side angle, it becomes clear that the mouse connector is likely a PS/2 connector and not USB (also typical during this timeframe).

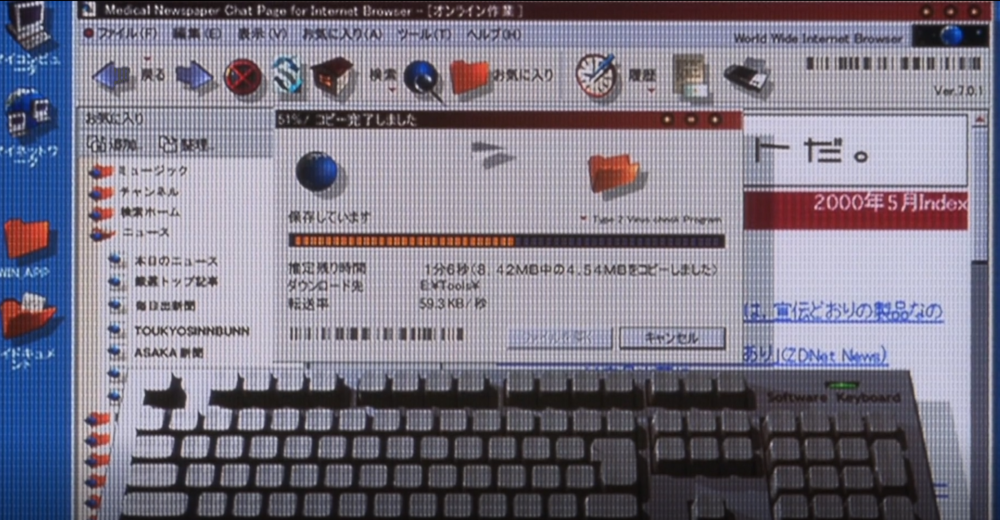

A retro view of downloading a file from the internet back in the day. The blocky windows, the use of large icons and download animations suggests that this is featuring Windows 95 or Windows Me. Though the circular window options (e.g. the minimize, expand, and close buttons) being circular makes it hard to tell the browser being feature. It could be Netscape or Internet Explorer (IE). I am going for Internet Explorer due to the fact that Netspace popularity severely declined in the late 90s. The version number does not match IE version at time the movie was released. It would have been likely IE 4 or IE 5 that is being featured but perhaps there was a Japanese web browser during this time period that I am not aware of that would better match this.

A feature now lost in time, the iconic zoom magnifying glass. I am not sure when web browsers started to phase out this feature, I totally forgot it even existed. Looking at demos for Internet Explorer 6, the picture resizing tool is quite different from what I recalled. Perhaps this feature died off much earlier than I thought.

Bonus: I found a nice webpage that features the looks of various softwares at different period of time.

The style of placing the monitor above the horizontal desktop tower is definitely the product of the 80s or the early 90s. Monitors in the were typically fat back then, even during the early 2000s as LCD displays were expensive compared to their CRT counterparts.

Bonus: Japanese Computers

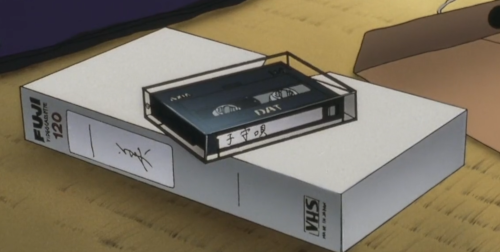

A classical VHS cover along with an audio casette tape that were popular in the 80s and the 90s. Not sure if it’s the art, but I recall casette tapes being much slimmer than portrayed in the anime. Perhaps this is not an audio casette but rather a compact VHS (VHS-C used in camcorders. I simply assumed it was an audio casette tape since there would be no need for a VHS and it is later featured for audio usage in the movie. Though I could have recalled this incorrectly.

Note: My memory is very fuzzy when it comes to tech from the 80s and 90s as I grew up in the 2000s so I only had limited interaction with these technologies.

This is from the webtoon, I Became the Villain the Hero Is Obsessed With, featuring the output of the top command.

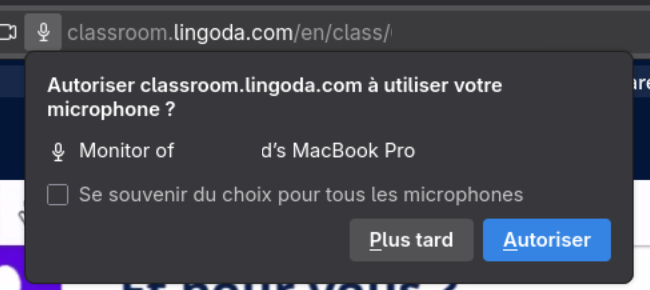

Fedora 43: Random Request to Access Macbook Pro Microphone

November 18, 2025

Fedora 43 was released in October 28 and my only gripe against this update is their inclusion of ROAP, some feature to connect to Apple devices. It was honestly annoying because my microphone would stop working once the video platform demands if I wish to connect to someone’s Macbook nearby. And this happens often during classes so I often had to re-enter the room to resolve my microphone issue.

Within my audio settings in the quick access menu on the top right of GNOME Desktop shows various Apple devices that can be used for audio output:

It is not clear to me if Fedora purposely included this Pipewire module or it came packaged in the Pipewire version they selected. Regardless, it’s plain annoying. While not the same, others appear

to have encountered the same issues. Following another approach from Reddit,

I at least have gotten their weird Microphone request prompts. Though I can still Apple devices as potential audio outputs, I can live with Apple polluting my audio settings. The solution is to delete

the offending package pipewire-config-roap

Random Photos

November 14, 2025

Nothing technical, just some random photos I took that I found on my phone.

It snowed recently in the city I currently moved into. Though it quickly melted. It’s definitely a lot warmer here compared to where I am from.

Disassembling my laptop because I spilled coffee over it … nothing got damaged thankfully. Though if any components got damaged, it would be easy to repair (#Framework). Reminds me how I once destroyed my Lenovo P50 workstation years ago … the company did not give me an expensive laptop after that incident.

While I don’t use my pull-up bar as much as I should, it makes a good dry rack.

Snow in my “home city”, apparently this was in April based on the name of the image … the first real winter in a while. Winters in Canada, at least in my homecity, has been quite warm over the past few years unfortunately. Hopefully, we will see some normal winter this year.

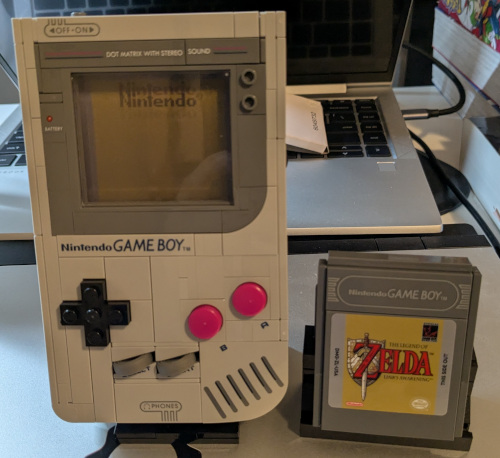

I spoiled myself last month with Lego. I have not touched lego in over 15 years … I sure am old … The kit impressed me a lot.

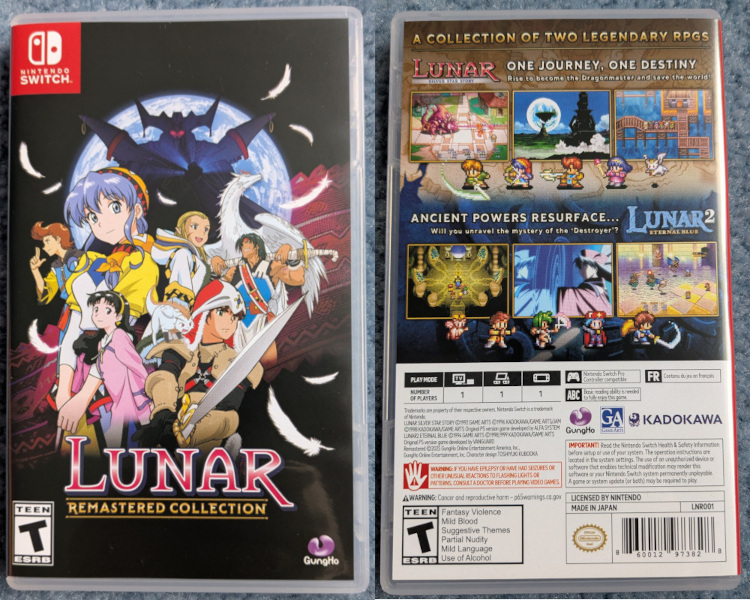

One of my favorite PS1 games and games in general (I do not play much video games), Lunar the Silver Star and Lunar Eternal Blue, on the Switch. Luckily the game I preordered came just before I moved to another city for my internship. Great game, I hope they make a remake of Mana Khemia Student of Al-Revis. It seems like they made a remake of Final Fantasy Tatics recently :)

This is the game that got me into JRPG, I loved the dialogue and the storyline a lot. What is great about Switch games is that you can change the language easily so this was my first game all in French.

My Pokémon Gameboy Color collection. Most of the games were a Highschool birthday gift from my father. I grew up with Pokémon Yellow and Silver which explains why I have two copies of them. Pokémon Crystal is in Japanese so I never bothered playing it. Growing up, my Dad would periodically mail to my brother and I Japanese magazines and electronic kits. Though that never motived any of us to learn Japanese. Though I think my brother did learn a bit during the pandemic but stopped when he started his Masters. (Not from a Japanese family to dispell any potential misunderstanding).

Also featured in the photo is my Pikachu pencil holder (the one in front of my tamagotchi) and some random Maplestory tokens that I am not sure what they are for. There are also some Hanafuda cards that you may have seen in Summer Wars. I forgot how to play the game. My grandmother would often play with Hanafuda cards alone, not sure what game it was. There is also a Digivice that I used to play with, I think it originally belonged to my sister. In kindergarten, I had the OG digivice but I have very little recollection of it aside from recalling of its existence. Too bad I no longer have my Golden Burger King Pokémon Cards, my mother gave it to some kid as punishment for not cleaning my room along with a bunch of my other toys. There is also Laputa Poker Cards in the background if you were wondering what anime that is from. Funny enough, I don’t think I ever watched Laputa in English just like how I never watched Totoro in English (no subtitles either) so I probably never understood the story properly.

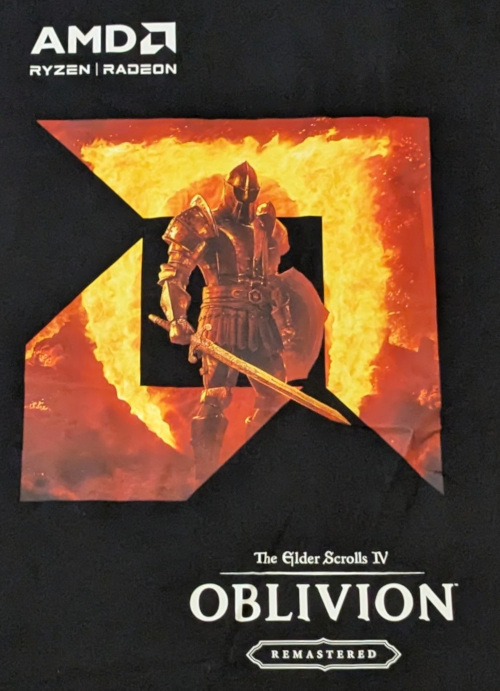

An Elder Scroll t-shirt I won at one of the places I worked at. Unfortunately it’s 2XL, way too large for me. I have a knack winning t-shirts way too large for me at company events including a Raptors in 2019, the year the Raptors won the NBA Championship. This will probably end up as a gift or a cushion for my fragile items. On that day, I learned that Skyrim is part of Elder Scrolls. I am ignorant when it comes to video games as I don’t play them often.

A sticker I received on AMD’s 40th anniversary in Canada. It’s actually the 40th anniversary of ATI’s founding, a Canadian semiconductor company that specialized in developing GPUs which AMD bought. That is how AMD entered the GPU market. The Canadian office does a lot of CPU and GPU related design but I don’t think most Canadians know this.

An Ericsson branded car roaming around the community near their office. Ericsson is a Swedish company that is currently dominating the 5G market (if we disclude Huawei). Nokia and Huawei is within 10 mins walking distance from the Ericsson building I used to intern at. Canada used to be the best in Telecommunications with the likes of Nortel and Blackberry dominating the telecommunication and handphone market. Though poor management decisions caused the two to fall … Blackberry still exists but they pivoted markets. As for Nortel, as much as some may say Huawei stole their IP, it was ultimately mismanagement that brought down Nortel, and not from Chinese espion. Fun fact, Nortel HQ became the HQ for the Department of National Defense and it took the military some time removing all the “bugs” from the building. Another timbit, Nortel execs knew of Chinese espionage but did not care at all.

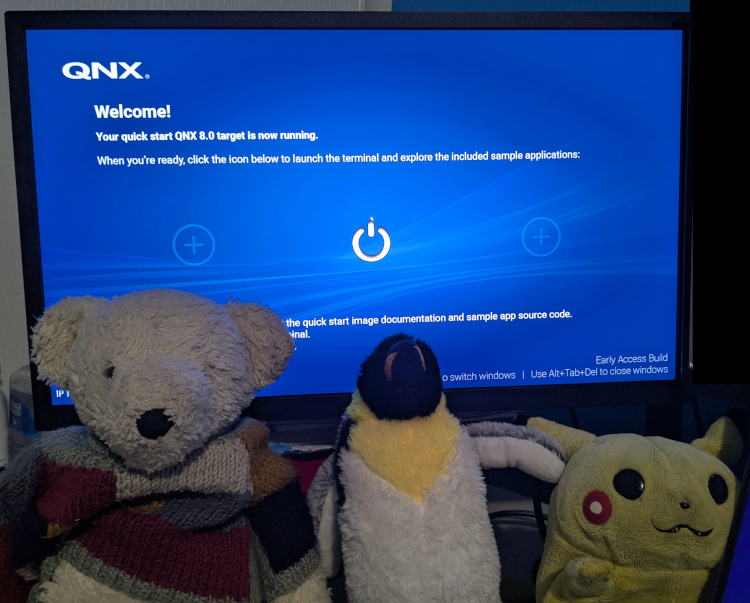

Continuing on the theme of companies I formerly interned at, here is QNX 8.0 running on a Raspberry PI. QNX Is a Real-time Operating System (RTOS) that was started in my “neighborhood” (not sure what to call it as it’s no longer considered a city but a suburb of a larger city). It’s primarily used in cars but it can be used in any safety critical devices such as in medical devices and in rockets (though I am not entirely sure of how widely adopted it is within the space industry). It’s one of the three widely-used Microkernels (from what I know), the other being Minix installed in every Intel chip and Apple. Apple has adopted some variant of L4 Microkernel OS for their ARM secure enclave and it also appears that SeL4 is used in many places as well so my statement about QNX may be false. There’s also WindRiver’s VxWorks. I never looked at the figures so my claim about QNX being one of the 3 most-used Microkernels could be entirely false.

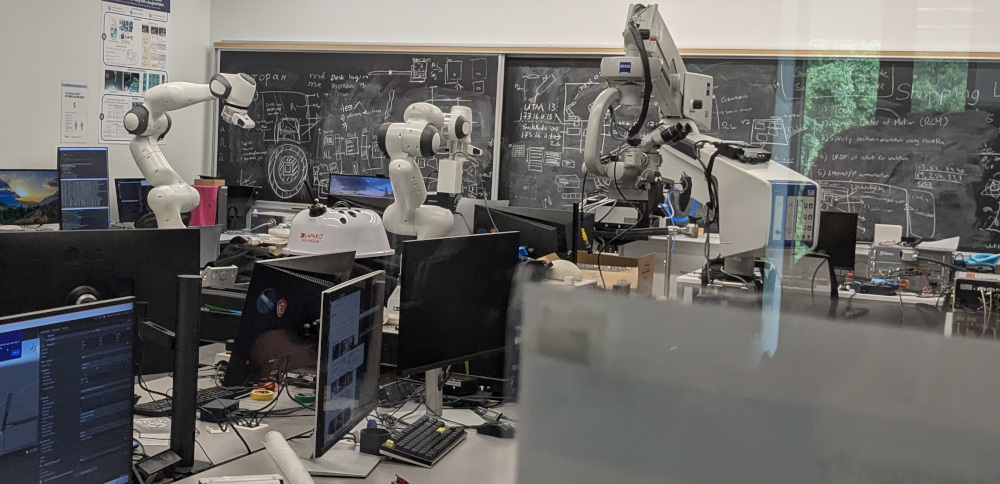

Now that I moved closer to the city where I did my studies in Computer Science years ago, I decided to take a tour and see what has changed. It seems like there are a lot more robotic arms now. In my final year, I was one of the first students to take robotics in the department of Computer Science (officially called the Department of Math, Statistics, and Computer Science), and we had no robotic arms for use. It was all simulation but that might have been for the best. I definitely would have broken an arm or two with my terrible code and calculations.

Not sure what the trend was but when I visited my alma mater in the spring, the Computer Science profs have been posting Pokémon cards on their doors with cute name-tags.

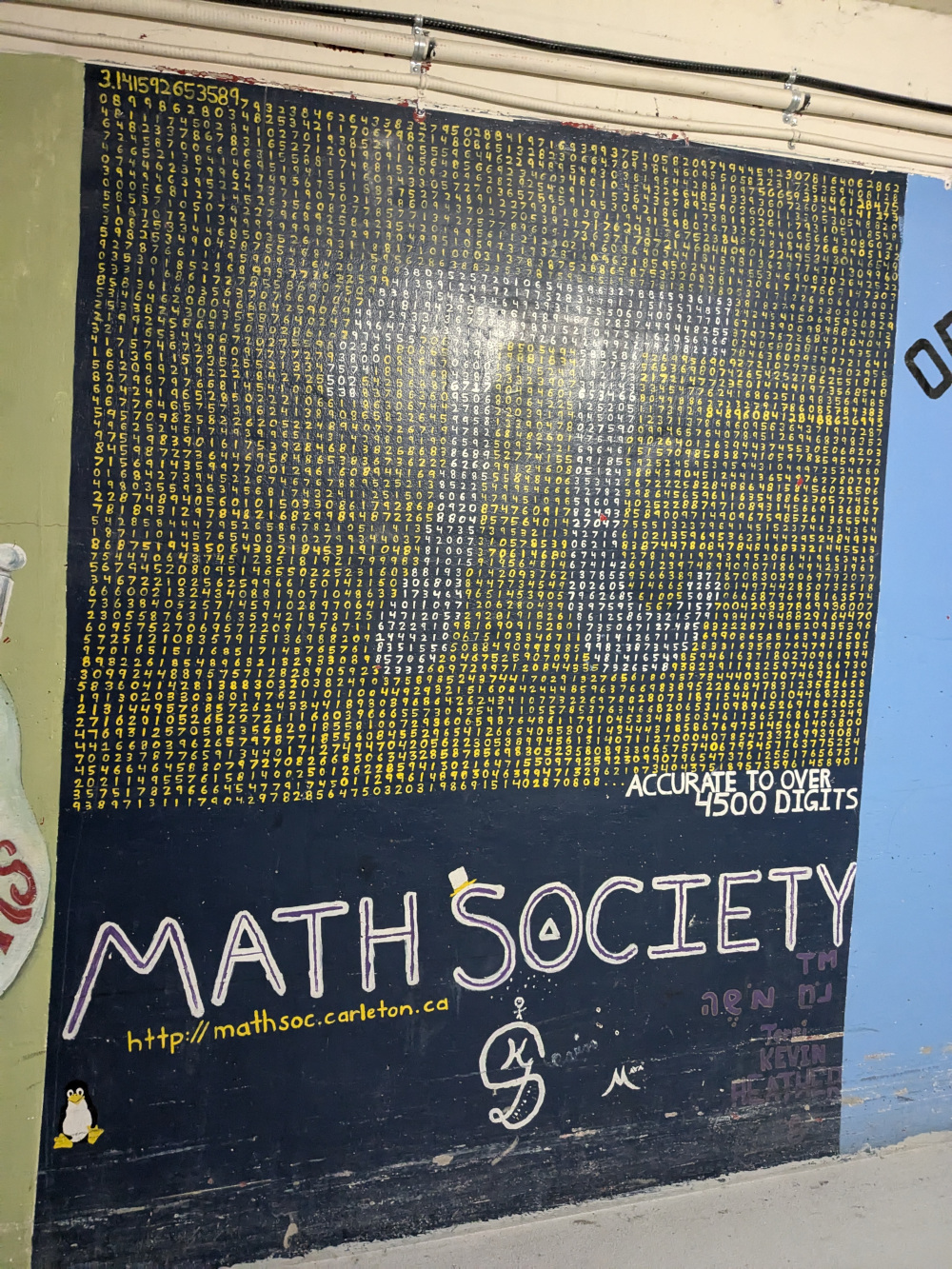

At my current university, all the buildings are connected by tunnels, allowing students to avoid the snow during Winter. This is the old Math Society’s mural in the tunnel proudly featuring Tux the Linux Penguin. There is now a new Math Society mural but it looks like some ugly jail cell. My friends and I are clearly not artists …. Unfortunately, I don’t have photos of the new mural but perhaps I will take a picture when I return back to finish my final year of undergrad (hopefully). Trust me, you will be disappointed if you were to see the new mural. The new mural does not have Tux the Linux Penguin unfortunately due to my lack of skills. I was notified that if I don’t visit the university before I move, there would be no reference to Linux in the new mural. Therefore, I had to come and write “LINUX” in large font to overpower all the random math equations on the wall.

p.s. I ain’t going to verify if the first 4500 digits of PI was actually written correctly but feel free to verify yourself.

A friend doing graduate studies in Linguistics recently went to Japan in the summer and mailed me this postcard from there. I guess he was in Japan for a while. I got to know him from my Mathematics courses as he was originally a Mathematics student who had a well-diverse interest in science, music and languages.

While I rarely eat out due to costs, I found an anime styled drawing at McDonald’s kiosk which was surprising.

How Linux Executes Executable Scripts

November 8, 2025

One is traditionally taught that when running an executable (a file with execute permission), the shell will fork() itself and have the child process replace itself using execve().

One winter a few years back, I random question popped in my head: Who determines whether a file is a script or an executable and where in the code does this logic lie in?

Recall to invoke a script or any non-ELF executables such as Python and Bash script, one needs to specify the path to the interpreter using the shebang directive (#!) such as

#!/usr/bin/bash

or

#!/usr/bin/python

I was unsure whether the responsability of executing the script properly was the job of the terminal (bash, sh, csh, etc) or the kernel. I highly suspected it was the role of the

kernel and randomly I came across an article How does Linux start a process that answers this question. In short, when one calls execve,

From there into

search_binary_handler()where the Kernel checks if the binary is ELF, a shebang (#!) or any other type registered via the binfmt-misc module.Excerpt from How does Linux start a process

/*

* cycle the list of binary formats handler, until one recognizes the image

*/

static int search_binary_handler(struct linux_binprm *bprm)

{

// ...

list_for_each_entry(fmt, &formats, lh) { //iterate through all registered binary format handlers

if (!try_module_get(fmt->module))

continue;

retval = fmt->load_binary(bprm); // attempt to load executable as the current format

// ...

if (bprm->point_of_no_return || (retval != -ENOEXEC)) { //format recognized so stop searching

read_unlock(&binfmt_lock);

return retval;

}

The kernel will call search_binary_handler() to determine the type the binary (executable) by iterating through all registered formats

which includes (not in order):

- ELF -

binfmt_elf - Scripts (

#!) -binfmt_script - Misc -

binfmt_misc: Linux (kernel) allows one to register a custom format by providing a magic number or a filename extension (see Kernel Support for miscellaneous Binary Formats)

Each binary format fmt (struct linux_binfmt has a function pointer load_binary used to load the binary. This is the function the

kernel uses to help identify the binary type as this function will return -ENOEXEC if the binary is not of its type.

/*

* This structure defines the functions that are used to load the binary formats that

* linux accepts.

*/

struct linux_binfmt {

struct list_head lh;

struct module *module;

int (*load_binary)(struct linux_binprm *);

int (*load_shlib)(struct file *);

};

For scripts, the loader can be found in fs/binfmt_script.c a function load_script:

static int load_script(struct linux_binprm *bprm)

{

const char *i_name, *i_sep, *i_arg, *i_end, *buf_end;

struct file *file;

int retval;

/* Not ours to exec if we don't start with "#!". */

if ((bprm->buf[0] != '#') || (bprm->buf[1] != '!'))

return -ENOEXEC;

/*

* This section handles parsing the #! line into separate

* interpreter path and argument strings. We must be careful

* because bprm->buf is not yet guaranteed to be NUL-terminated

* (though the buffer will have trailing NUL padding when the

* file size was smaller than the buffer size).

*

* .... truncated ....

*/

// parsing logic

bprm->interpreter = file;

return 0;

Things to Look At Next

- Wonder about how Linux handles ELF binaries, specifically how it handles static and shared binaries? Take a look at How does Linux start a process

- fork() can fail: this is important

- TODO: Investigate why a script without shebang fails on

strace ./test- use

bpftrace:sudo bpftrace -e 'kprobe:load_script { printf("load_script called by %s\n", comm); }' - find other trace events to look at to distinguish between the two cases like exec

- use

- Edit (Nov 10): Someone posted today on Hacker News Today I Learned: Binfmt_misc which goes over

binfmt_miscfrom a security perspective- Links to ON BINFMT_MISC, a tutorial on how to register a new binary format

Hangul - Unicode Visualiser

October 31, 2025

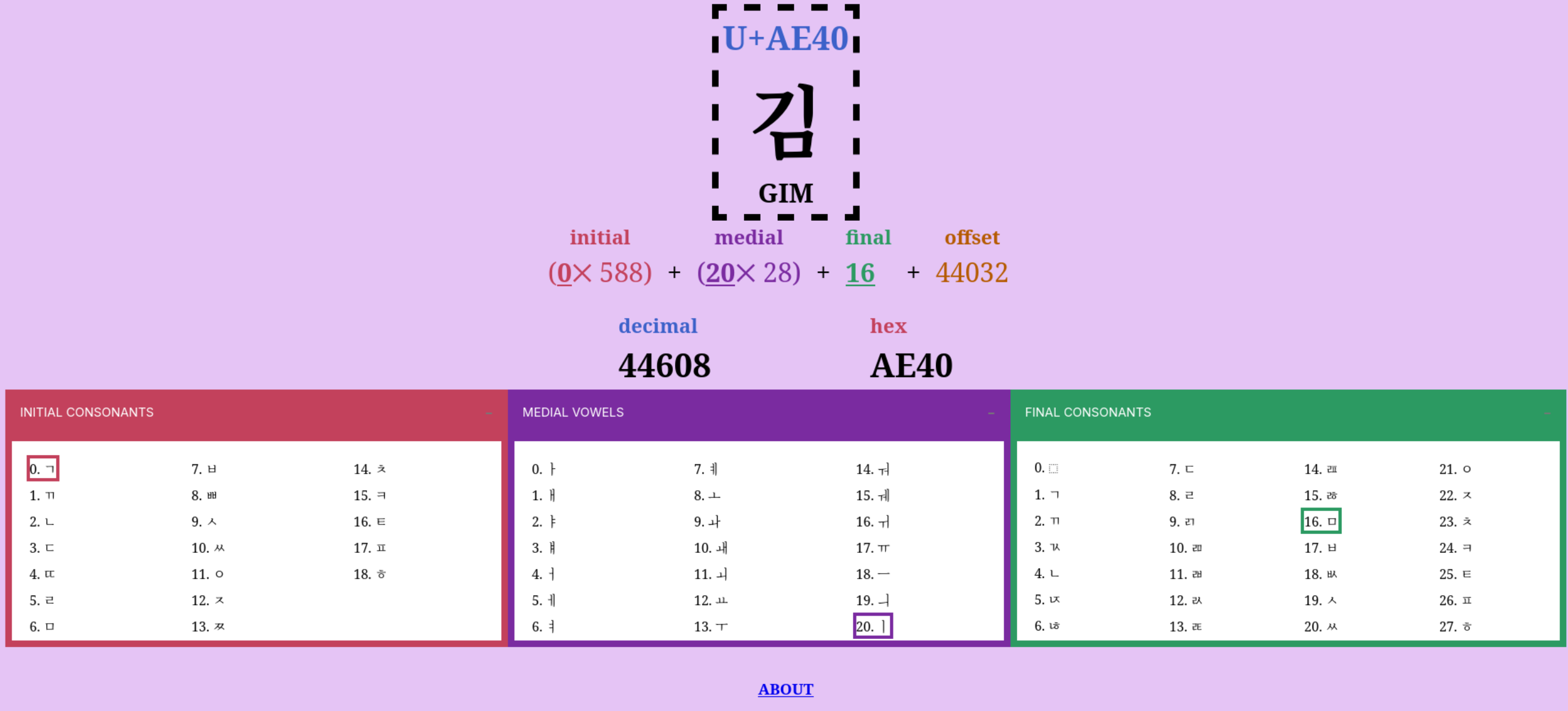

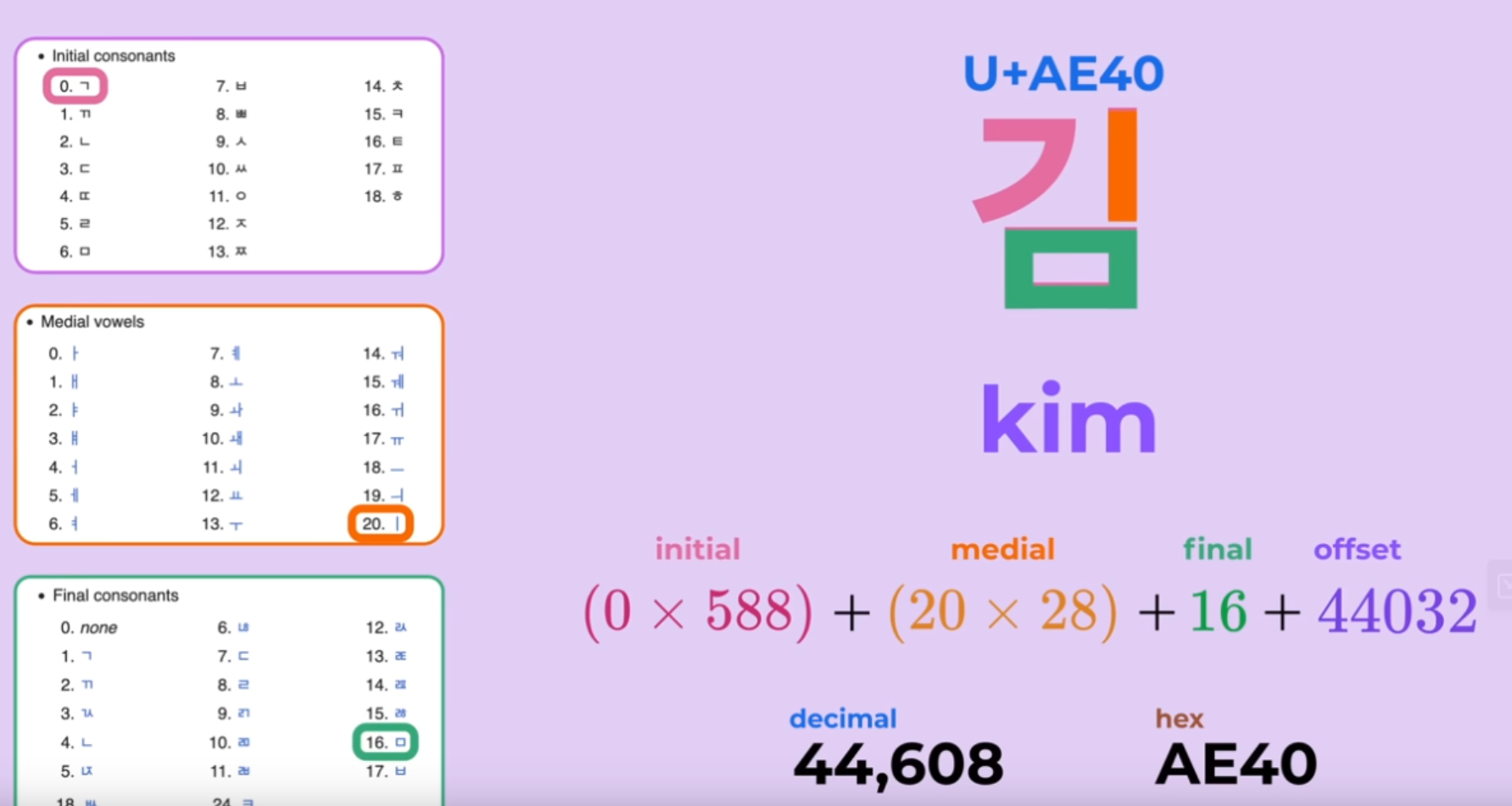

Inspired by the best video I have encountered on Unicode, I was inspired to create a visualiser of how unicode represents Hangul, the Korean writing system, that was covered in the video.

Link to visualiser: https://zakuarbor.codeberg.page/unicode/hangul/

It is fascinating how one can represent all 11,172 possible combination so elegantly. The visualiser allows one to determine the codepoint of any Korean syllabic blocks. For those unfamiliar with the language, Korean is made up of 19 consonants (자음) and 21 vowels (모음) though some sources may say there are 24 basic letters (Jamo). The number does differ depending on how you count the letters but we can agree that there the language has 51 Jamo (there are 24 basic letters and from these you could form more complex consonants and vowels). To form a complete a syllable block (a word is made up of one or more of these blocks), there must be at least one consonant and one vowel that is attached to each other. A syllable block is made up of 3 components:

- An Initial constonant: 19 possible consonants

- Medial vowel: 21 possible vowels

- Final consontants (optional): 27 potential consonants to choose from including consonant clusters if one chooses to include one (though for the formula to work out, we have 28 options, option 0 being a “filler” (i.e. nothing)

Wikipedia has a great entry on how the letters are placed within a block. The purpose of the analyser is to visualise how Unicode computes the correct codepoint. There is actually no need to understand how the language works to understand how unicode is able to compute the codepoint associated with the block.

Random but here’s a great UTF-8 playground I encountered. I probably should update my visualiser/playground to include UTF-8 encoded representation.

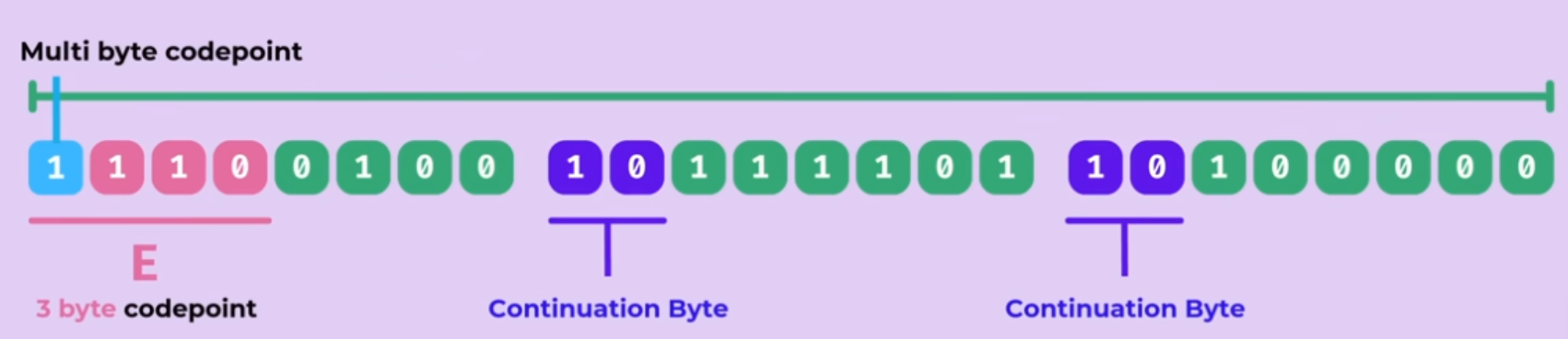

UTF-8 Explained Simply - The Best Video on UTF-8

October 6, 2025

Video: UTF-8, Explained Simply

Channel: @nicbarkeragain (Nic Barker)

This is the best explanation I have found on UTF-8 thus far. I previously said UTF-8 is Brilliant was the cleanest explanation I’ve seen on this subject, well that was shortly beaten on October 2 2025. There are a few reasons why I love this video:

- Builds up the need for unicode via history and how the 8th bit on ASCII could be used as parity bit

- Interoperability

- How Unicode-8 is backward compatible - old ASCII format works with new decoder

- a brief history of how UTF-16 came to existence

- How Unicode-8 is also forward-compatible - UTF-8 remains compatible with existing ASCII decoder

- Self-synchronization problem in Variable-width encoding bytecode - In the event of a data corruption, how do we know whether we are on the beginning or somewhere in the middle of a byte

- a question of how to identify the leading byte if dropped in a random chunk of data

- How to determine the first byte of a 2, 3, 4-byte code unit sequence

- How to avoid potential conflicts of codepoints existing in different byte sequence (only the shortest representation of a codepoint is used)

- Zero-width joiner to combine emojis

- How UTF-8 represents Korean - How UTF-8 allows you to construct and edit each block efficiently through Math

I definitely should edit my blog on character encoding as there are probably some areas that are quite questionable after going through various articles and videos on unicode over the year.

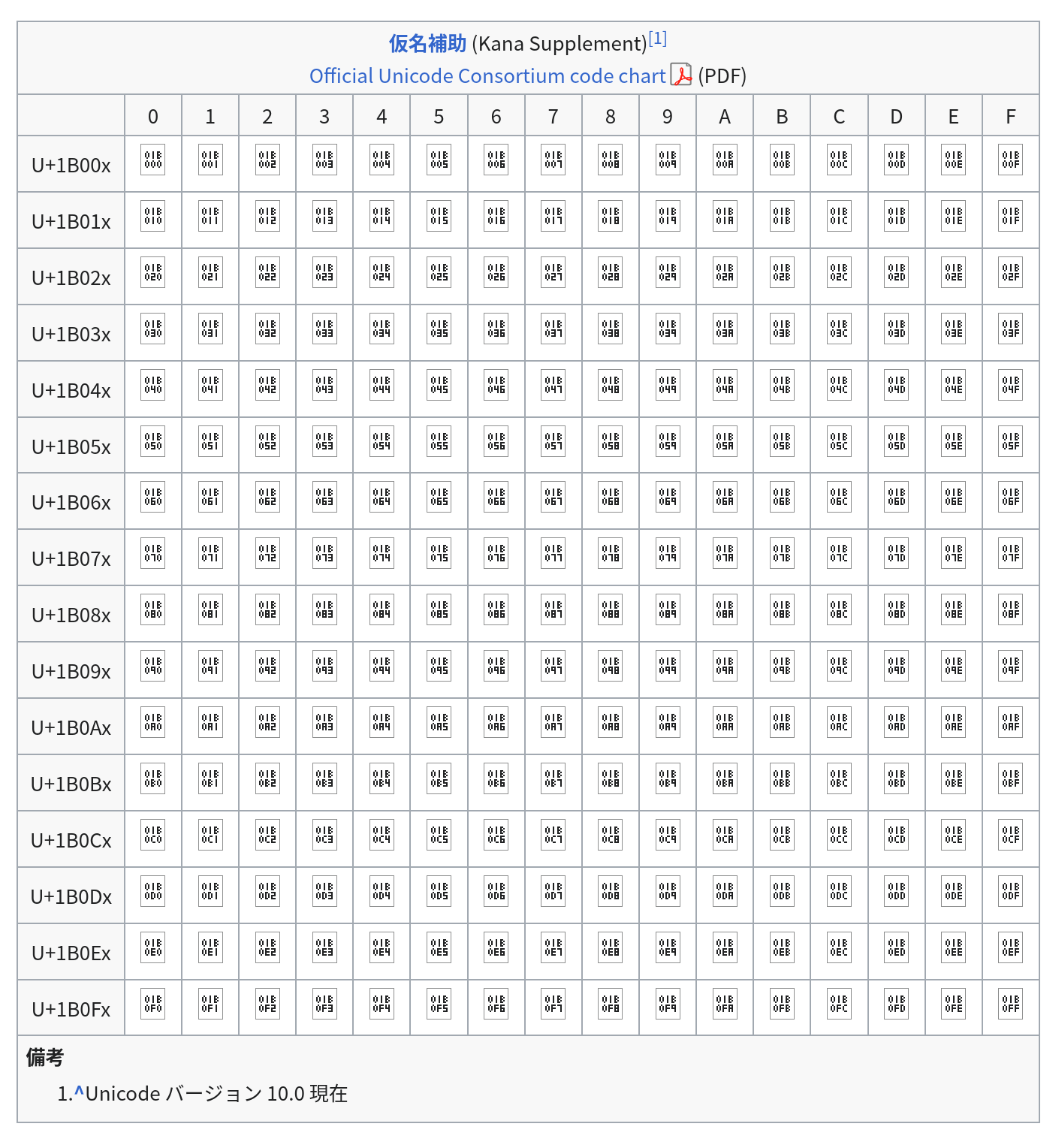

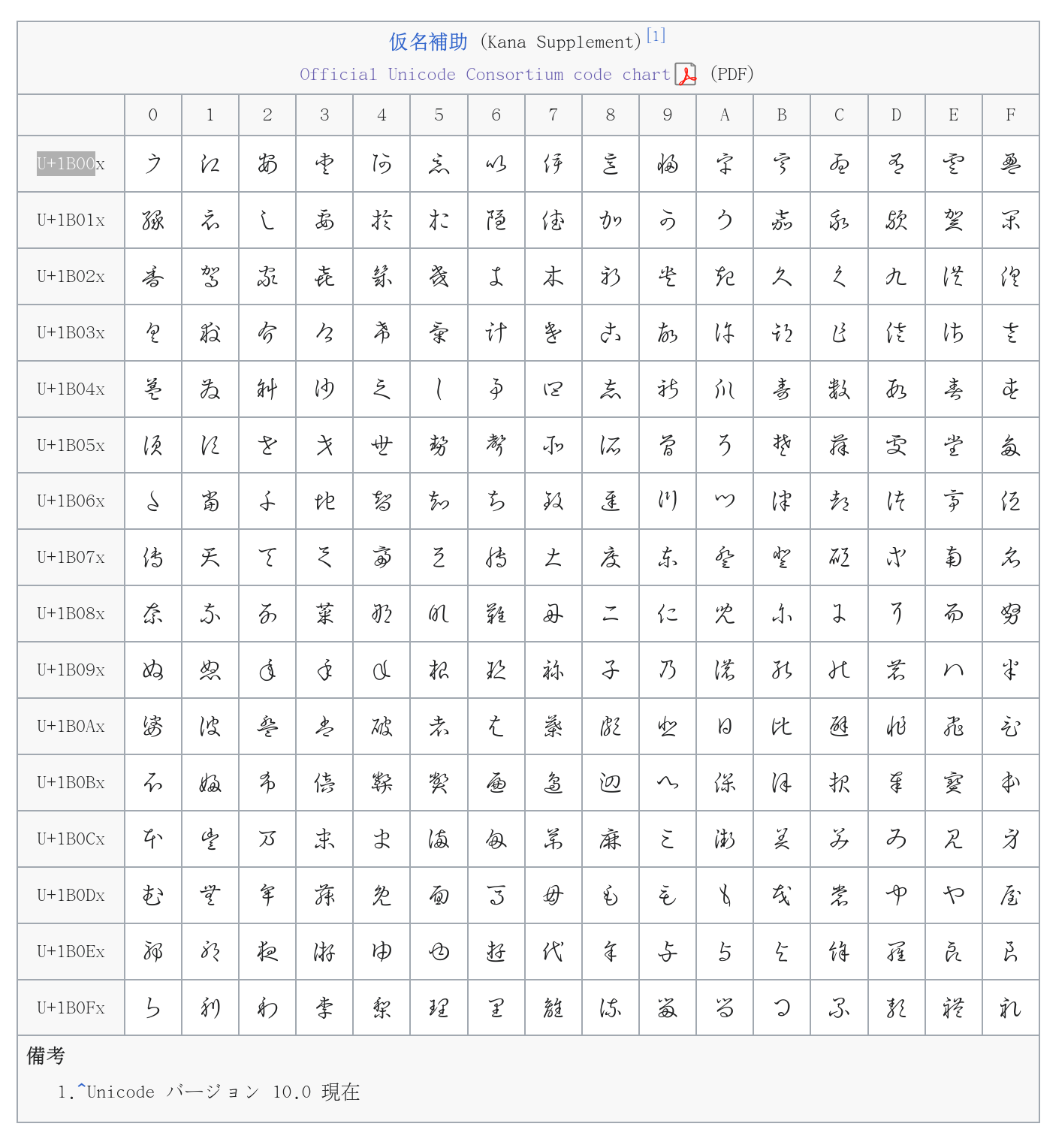

Render Archaic Hirigana

October 6, 2025

What does does U+1B002 look like on your screen? 𛀂

Did it look like some some unicode box inscribing the unicode value? That is because your system lacks the typefont to render the unicode.

If you visit the following wikipedia page on 変体仮名, you’ll likely notice the following:

Instead of:

To resolve this, essentially install the true type font *.ttf file from BabelStone

Credits to the following article as it outlines much clearly how to install the font:

A Beginner’s Guide to Inputting Historical Kana: Installing a Font for “Hentaigana”.

I instead went through the harder route before reading this article by installing the font under ~/.local/share/fonts and running fc-cache -fv to forcibly reload the font.

Turns out you can install the GUI similarly to what the article proposes for MacOS by opening the file on Linux (double-tap) and an option to install the font will appear:

Refresh the page and you should now see 𛀂

Note: For some reason Firefox on Private-Mode is unable to render the archaic Hirigana, not sure why

Migrating Away Github: One Small Step To Migrate Away From US BigTech

October 3, 2025

As title suggests, I no longer actively use Github as my personal Git hosting service. This does not mean I have abandoned Github entirely as I still visit the site and use it regularly for work.

For a while, the code for this site, my blog, and my personal side projects have been hosted on Codeberg, a non-profit German organization that hosts their servers in Europe. Similar to my migration from Wordpress to Github, none of my content have been deleted but rather has been archived for data preservation and to prevent link rot. I originally discovered and created my Codeberg account when trying to replicate performance claim made by some in the Linux French forums.

This move does not imply any hatred or dislike of Github as a platform as it has served me well over the year. Rather, it reflects my gradual and slow effort to reduce reliance on Big Tech, especially those operated by large US companies. As the 2nd part of the title suggests, I have been wanting to DeGoogle and big tech in general for years. However, it has been hard to justify the move as I honestly don’t have too much qualms providing data to these giants. This seems very contradictory to others as I have made this argument a lot to push others to use Linux or any other open source POSIX-compliant OS (e.g. BSD). Though you should seriously consider leaving Windows if you have not have done so, it’s a bloated privacy-invading OS. I believe in the Right to privacy which Big Tech does not provide at all.

Due to the recent statements and actions coming from the United States, I have made the decision to start the migration to gain more independence from US tech and start searching for more open alternatives. I am still a slave to Google, relying on their entire ecosystem such as Google Playstore, Google Search, Google Drive, Gmail, Maps, Google Auth, Sign In via Google and Youtube. To minimize disruption and pain, I will be making a slow transition starting with replacing services and software that are the easiest to replace which happened to be Github. Currently, I am slowly transitioning my email to ProtonMail, migrating the most essential services first such as Banking. I do plan to keep my original email to remain in contact with anyone from the past and to tie any my insecure or data-invading services to Gmail, effectively creating a two-tier email system.

I do believe it is important to remain pragmatic when moving away from US tech. It is not realisitic to completely cutt off entirely from US tech given their dominant position in the market. After all, I earn my living from US companies who frankly create awesome products and do a lot of R&D that benefits society as a whole.

In other words, it is important to have a balance and identify which services can be replaced the easiest and which pose the greatest risk if access were suddenly restricted for injust reason. As part of the effort, I have made an offline backup of my data on Google Drive (though whether the archives contain any corruption, time will tell).

I still have not identified the next platform to replace but here are some ideas:

Authenticator - I need cloud sync. Losing my phone on the bus nearly locked me out of all my accounts which cloud backup to my tablet saved me- I carry a Yubikey around with me but not every service supports this yet

- Edit (Nov 9): - I have made the full transition to ProtonAuth

- Office Suite - A cloud office suite such as Google Drive are hard to give up due to its ease of sharing and collaboration tools. The most realistic path is to self-host.

- ProtonDrive nor does La Suite, French Government Suite, have replacement for Presentation and Spreadsheet at the time of writing

- Collabora and OnlyOffice seems to be the only alternatives

- Phone - I currently use a Google Pixel 6a which has served me well till the summer when Google released an update that reduces battery capacity

I am hesitant to move to GrapheneOS since I am not sure if it supports my workplace’s authentication apps- Updates(Nov 9): I no longer use my personal phone to authenticate workplace applications, security didn’t like the fact I was using a third-party VPN and that I didn’t install workplace monitoring services (now using Okta Desktop to authenticate to avoid installing spyware on my phone)

Fairphone 6 would be ideal but is not available in my region so for now Samsung appears to be the only practical alternative though I don’t plan to replace my phone until reaches end-of-life as I have always done with my previous phones- Edit (Nov 9): Apparently Fairphone 6 is region-locked and will not work in North America

- Cloud Provider - At the moment, I don’t maintain any cloud instances since I no longer have a need for it (I previously used DigitalOcean and free-tier Oracle cloud).

I no longer have any cloud instances with any provider currently as I no longer have any use for them (I used Digital Oceans previously)

- OVHCloud - French cloud service whom I found out about via an article from the Register where Microsoft could not guarantee data sovereignty. However it does not seem they have any ROCm enabled GPUs which may pose an issue if I wanted to do any ROCm related work in the future (though I guess it would make more sense to use CUDA or write HIP programs)

- Search Engine: Google has been great to me thus far. I know others complained its search results have gotten worse over the years but it has been far better than other search engines I have used in the past such as Yahoo, DuckDuckGo, Brave Search and Ecosia. I heard Kagi is a good alternative, I should try the free version and see for myself before I make any commitment.

Binary Dump via GDB

October 2, 2025

Recently at work, I’ve been relying on a very handy tool on GDB called dump memory to dump the contents of any contiguous range of memory.

I first came aware of this tool from a stackoverflow answer

that a coworker linked as each of us had a need to dump the contents of memory copied from the GPU to the host memory for our respective tickets.

Syntax: dump memory <filename> <starting address> <ending address>

For instance,

dump memory vectorData.bin 0x7ffff2d96010 0x7ffff53bba10

or suppose you have a vector vec, you could dump the contents of the vector via:

dump memory vectorData.bin vec.data() vec.data()+vec.size()

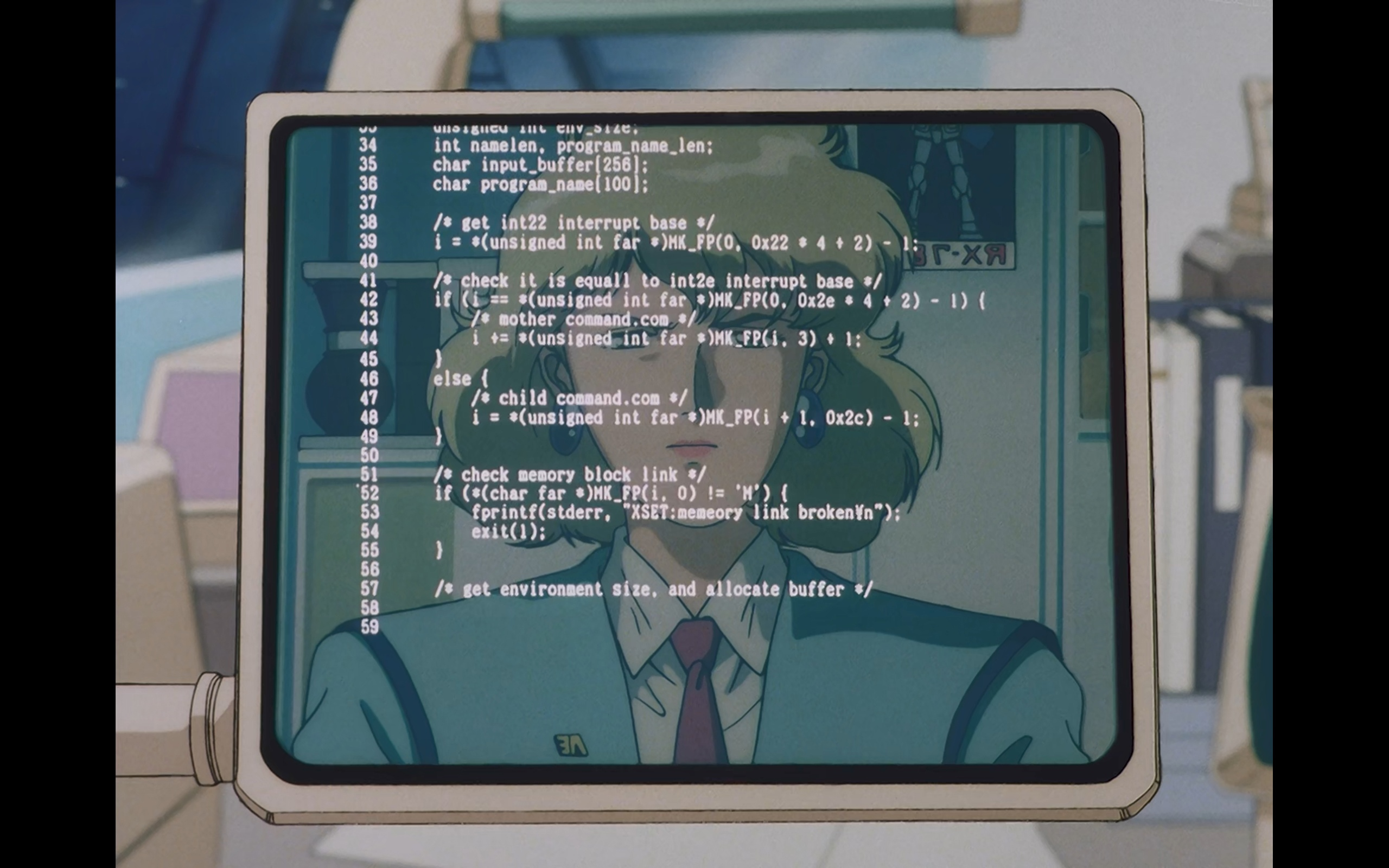

Gundam With Decent Portrayal of Code

August 27, 2025

Years ago I encountered a Reddit post asking us redditors to rate a piece of code shown in Mobile Suit Gundam 0083: Stardust Memory. This led to an interesting discussion and analysis of the code from a few redditors, particularly the analysis from gralamin who disected the code revealing a few interesting aspects of the code such as the potential architecture, OS, and purpose of the code.

Based on the release date of the anime along with the provided code, we can infer the following:

- Code is written for x86-16-bit DOS based on

- the use of far pointers

- reference to the DOS Command-line interpreter COMMAND.COM, the precursor of cmd.exe

- There is some IPC (Inter-process communication) going on whereby there is a parent process (named mother) and a child process

- NOTE: COMMAND.COM runs programs in DOS

- DOS system was set for Japanese locale using JIS X 0201 as their characterset

When I initially saw the post 3 years ago, I initially thought broken¥n was a typo seeing how there was a few spelling mistake in the document such as equall and memeory. However,

as gralamin alluded:

I’m not sure what is up with the yen n. Maybe at the time \ was mapped to yen on japanese machines, in which case this would be a new line.

After spending hours reading up on character encoding due to a request from a friend studying Linguistics, I now can confirm his speculation was indeed correct.

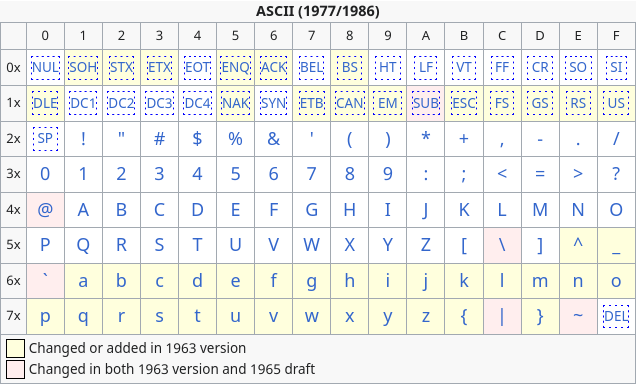

From the ASCII table, we can see that 0x5C maps to backslash \n

ASCII Table. Extracted from Wikipedia

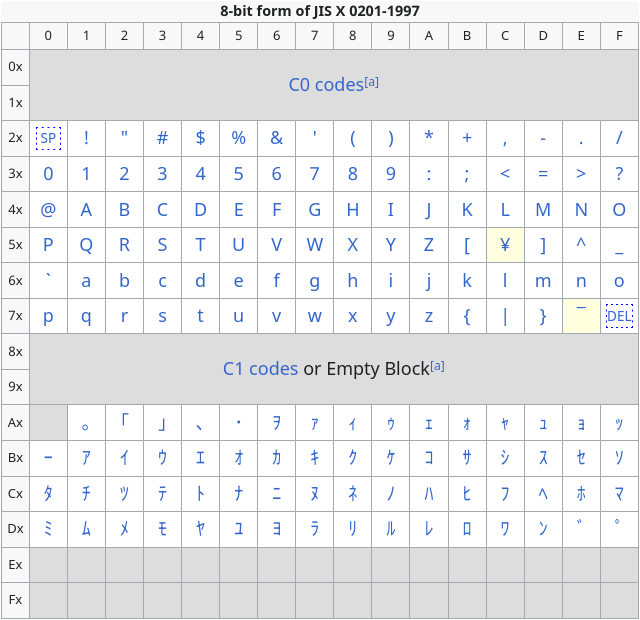

JIS X 0201 can be seen as an extension of ASCII where the upper unused bits were repurposed to contain Katakana characters and a few other things. However there are some slight differences as highlighted in yellow:

JIS X 0201 Table. Extracted from Wikipedia

As one can notice 0x5C no longer maps to baskslash \n anymore but rather to the Japanese Yen ¥. This makes no difference to the compiler’s perspective as from its perspective

as \ and ¥ has the same value. Wikipedia has a good comment about this effect:

The substitution of the yen symbol for backslash can make paths on DOS and Windows-based computers with Japanese support display strangely, like “C:¥Program Files¥”, for example.[14] Another similar problem is C programming language’s control characters of string literals, like printf(“Hello, world.¥n”);.

Whether intentional or not by the artist, this rendering shows some realism in the show (let’s ignore the fact the female lead is not Japanese).

Incorrect Translation of a Math Problem in a Manga

July 24, 2025

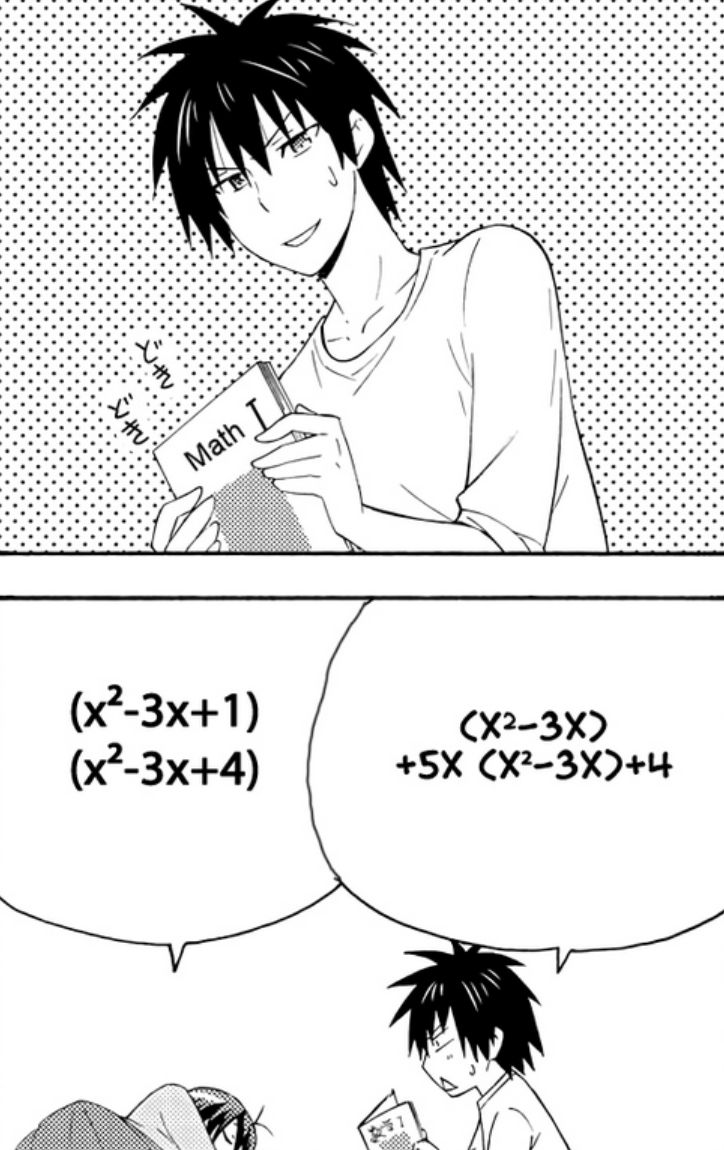

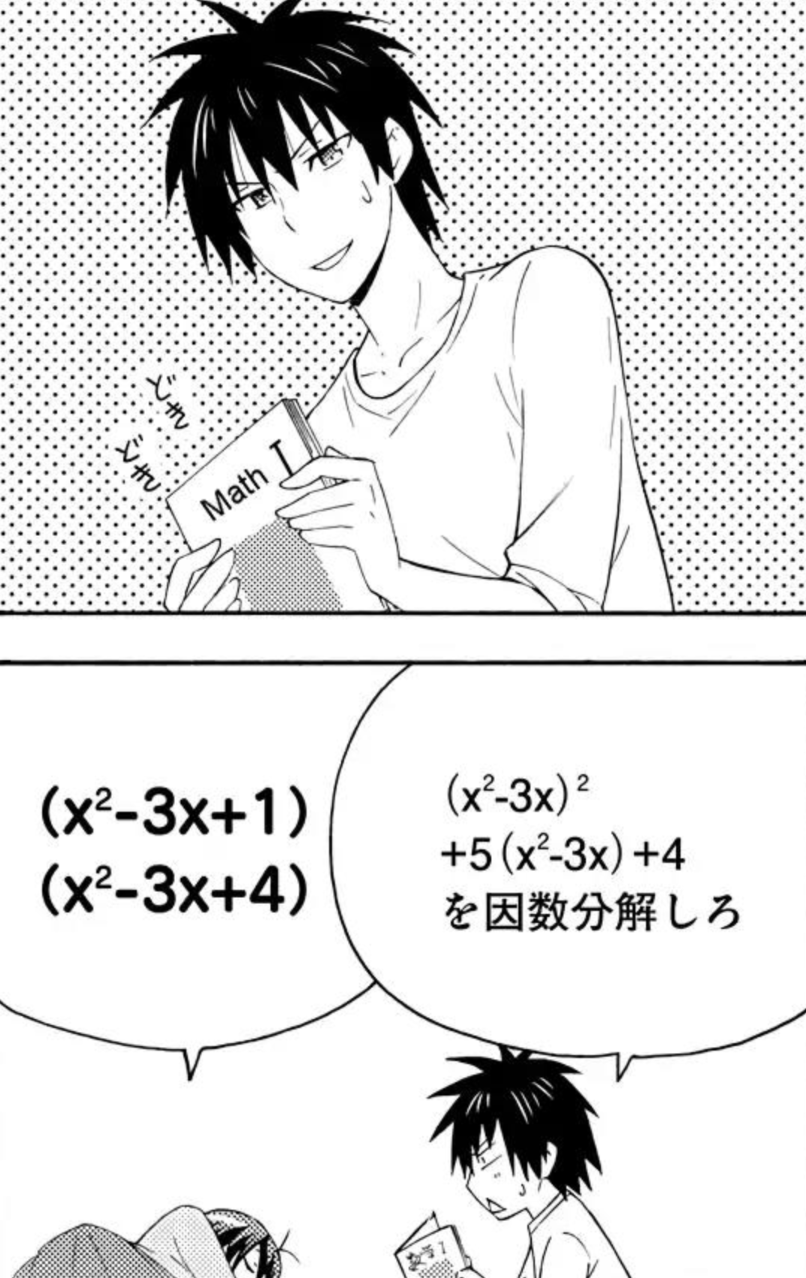

Recently I have been slowly reading through Danchigai, a comedy and slice of life manga in French. Whenever there is a math or code displayed in anime or manga, I sometimes have the temptation to analyze the problem. In chapter 27, the following problem was presented:

It is immediately obvious that there is a mistake during the fan-made scanlation of the manga from Japanese to French. In the original question, $(x^2-3x)+5x(x^2-4x)+4$, the highest order (degree) is 3 but the reported answer has the order of 4.

So I decided to take a look at the fan-made English scanlation and notice that the scanlator left the question in its original form:

With the original question unmodified from the Japanese source, we can now verify the answer:

\[\begin{align*} (x^2-3x)^2 + 5(x^2-3x) + 4 &= u^2 + 5u + 4 \quad \text{, } u = x^2-3x \\ &= (u+1)(u+4) \\ &= (x^2-3x+1)(x^2-3x+4) \quad \text{ as desired} \end{align*}\]Rational Inequality - Consider if x is negative

July 23, 2025

Someone posted on a group chat a snippet from his course notes:

i.e., it is WRONG if you try to do: $\frac{1}{x} \ge 2 \iff 1 \ge 2x$

and it was not clear to them as to why that is.

This seems like an innocent attempt to solve the inequality and it is not entirely incorrect to do so. But when working with rational inequality, one needs to consider the signedness of x (i.e. is x negative or positive). The inequality could change depending on whether x is positive or negative.

To give a clearer picture, take a look at the graph of $\frac{1}{x}$ and notice how the function behaves differently when x is before or after the vertical asymptote.

Here’s my crack at the problem, hopefully I didn’t mess up:

if $x> 0:$

\[\begin{align*} \frac{1}{x} &\ge 2 \\ \iff 1 &\ge 2x \\ \iff x &\le \frac{1}{2} \end{align*}\]if x = 0: Not possible (DNE)

if $x < 0$:

\[\begin{align*} \frac{1}{x} &\ge 2 \\ \implies \frac{1}{(-x')} &\ge 2, \quad x' > 0 \text{ & } x=-x' \\ \implies -1 &\ge 2x' \\ \implies \frac{-1}{2} &\ge x' \end{align*}\]Recall $x’> 0$, but we found $x’\le \frac{-1}{2} < 0$ . This is a contradiction and therefore $x’ \not \lt 0$

We have the following:

-

$x \lt \frac{1}{2}$

-

$x\ne 0$

-

$x \not \lt 0$

$\therefore x \in (0, \frac{1}{2}]$

The Issue With Default in Switch Statements with Enums

July 4, 2025

Reading the coding standards at a company I recently joined revealed to me the issue with default label within the switch statement and why it’s prohibitted when its being

used to enumerate through an enum. default label is convenient to handle any edge cases and it’s often used to handle errors. However, when working with enums, it is often

the case that the prpogrammer intends to handle all possible values in the enum and would turn on -Wswitch or -Werror=switch.

Let’s suppose I have an enum to represent the different suite in a deck of cards:

enum Suit {

Diamonds,

Hearts,

Clubs,

Spades

};

and my break statement looks like the following:

switch(suit) {

case Diamonds:

printf("Diamonds\n");

break;

case Hearts:

printf("Hearts\n");

break;

case Clubs:

printf("Clubs\n");

break;

}

}

The code above is missing the Spades suite so if we were to compile with -Wswitch:

$ LC_MESSAGES=C gcc -Wswitch /tmp/test.c

/tmp/test.c: In function ‘main’:

/tmp/test.c:12:3: warning: enumeration value ‘Spades’ not handled in switch [-Wswitch]

12 | switch(suit) {

| ^~~~~~

Note: LC_MESSAGES=C is just to instruct GCC to default to traditional C English language behavior since my system is in French

But if we were to add the default case:

switch(suit) {

case Diamonds:

printf("Diamonds\n");

break;

case Hearts:

printf("Hearts\n");

break;

case Clubs:

printf("Clubs\n");

break;

default:

}

Then we will no longer see the error

$ LC_MESSAGES=C gcc -Wswitch /tmp/test.c

$

However, we can get around this issue by being more specific with our warning flag using -Wswitch-enum:

$ LC_MESSAGES=C gcc -Wswitch-enum /tmp/test.c

/tmp/test.c: In function ‘main’:

/tmp/test.c:12:3: warning: enumeration value ‘Spades’ not handled in switch [-Wswitch-enum]

12 | switch(suit) {

| ^~~~~~

Note that -Wall won’t catch this error:

$ LC_MESSAGES=C gcc -Wall /tmp/test.c

$

2025 Update

May 24, 2025

Website

Since the last last update when I decided to revive my neocities website, there has been a lot more activities. Unfortunately, due to low motivation and laziness, I have not been able to finish a number of blogs and microblogs … There are definitely a number of topics I want to explore but I am in a slump and need to make some changes in how I manage my time … to be more productive. I’ve been reading less blogs and articles so the LinkBlog is starting to look bare …

Academic Studies

Previously I mention that I lost a lot of my initial interest in the domain after the 3rd year of my studies and therefore decided to take a year-long break from my studies to pursue an internship in telecom. The experience was … boring … I loved having lunches and taking walks with the team but the work was dull.

Originally I was supposed to find a summer job before returning to school in the fall but due to my impatience, I ended up signing a 16 months contract with an enterprise who designs CPUs and GPUs … so I am placing my education on hold for another year …

To give more context, most of the summer jobs are posted in the winter. However, I started my job search in the fall where most of the long term interships are available. After being rejected from what I thought would be a decent chance to get into the government for the summer (I’ve interviewed with them in the past and did very well but this time, they rejected me on the first stage of the interview), I ended up applying to any jobs that sounded remotely interesting regardless of their lengths of work. That is how I ended up moving around 400km away from my hometown and also delaying my graduation. It is getting scary because it’s been so long since I’ve done any serious Mathematics. To those who studied Engineering, the Math you do is vastly different from the Math I take, I know this as a student who had to start from year 1 despite having completed a degree in Computer Science which is way closer to Math than Engineering is to Math. It does depend on your country and institution, but there is a huge difference between the Math for future Mathematicians-wannabes and everyone else. It is a bit weird to explain but in Canada, the Math major is split into two categories:

- Math for those wishing to go to graduate school in Mathematics or do serious Math

- Math for those who wish to study Mathematics but not necessary pursue higher education in the field

In the former, students are exposed to some concepts of real analysis in their first year and is very theoretical and proof heavy. There’s little computation in the program. In the latter, students are exposed to proofs from a course on Mathematical proofs but it is identical to what Computer Science students had to take at my alma mater. The program is more computational heavy and resembles the Math courses that CS and Engineers take but with a bit more emphasis on theory.

Meeting my friends before my move and seeing them graduate and starting graduate school made me miss Math a bit. Although I did lose a lot of my initial interest in the subject, I do still want to look into the subject a bit more. Perhaps I’ll review some stuff from linear algebra (you should definitely read Linear Algebra Done Right if you are interested in the theory aspect), read some entertaining Math books (i.e. Math Girls series), and look into Mathematics for Machine Learning or Robotics during my spare time.

What I’m Doing Right Now

As I mentioned, I’ll be working for another year at a company in their GPU division. I’m not doing anything exciting but it’s definitely way more exciting than my previous internship. I’ve been having fun reading their new test and verification framework and learning the internals of their GPUs including the hardware architecture and assembly, something I’m not too familiar with and outside of my expertise. Though, I only need to know at a high level of the various components of the GPUs and writing basic shaders in assembly for my role.

It has been exactly a year since I’ve seriously started to study French and I sure did overestimate my knowledge of the French grammar … While I learned a lot, my progress in the language has not been smooth. Considering languages is my weakest subject, my progress has been acceptable. For context, despite only speaking English fluently, I struggled to learn a single language which greatly impacted my academic performance. I actually have been provided a translator before when I was a kid, and I’ve been asked if I was international due to my poor English in Highschool.

The French language can be broken down into 3 categories or 6 levels if we follow CEFR (Common European Framework of Reference). I stupidly chickened out of A2 (advanced beginner) back in November so I asked the examinators if I could downgrade my level to absolute beginner. Unsurprisingly, I aced it as it. In March, I took the advanced beginner exam (A2) and also aced it which was also not a surprise considering I was originally planning to take the exam back in November.

I plan to continue my studies in French for the next year and take the “lower intermediate” (B1) exam in the coming Fall. Lately, I’ve been debating if I should pick another language such as my mother tongue now that my parents will never know that I am learning it (I would hate if my parents find out because they would be so happy but it would make communication easier as we can stop goolging words and also stop relying on translations to communicate with each other. I am not too close to my parents so I never picked up the language). Though I don’t know how feasible it is to learn 2 languages, learn CS, and Math would be … very difficult to juggle.

Learning my mother tongue has always been in my list of to do but something I’ve put off since it wasn’t a priority. It sure would be less awkward when strangers communicate with me in Korean or request that I do translation for either a visiting or new Engineer from Asia since everyone assumes I’m not a native English speaker due to my mediocore English profeciency and name. Though if I was to pick up my mother tongue, it would only be till the latest available language exam available in the near available city before I return to school. Then I’ll place my studies on hold till after I become fluent in French. Though I do wonder where this thought to learn another language came from. Perhaps it’s my manager saying random Korean greetings to me (which I ignore because I’m not sure what to respond without revealing my equally terrible prononciation).

I want to conclude that Youtube Shorts are evil and Youtube should give users the option to disable this feature. Short form is ironically a big time consumer despite its length. It is just way too addicting.

DuoLingo Dynamic Icons on Android

May 6, 2025

Recently, I have been noticing that the icons for Duolingo changes throughout the day depending whether if I have done my daily exercise:

Originally I thought this feature is called adaptive icons due to its name but it is not.

In Android Development, there is a file that contains information about your app such as the permissions, minimum device versions, name of the app and etc in the AndroidManifest.xml.

This file also includes the icon to use for the app if provided. Apparently there is something called activity-alias

provides more flexibility and allows you to enter the same activity (i.e. think of it as a page or a view in the app). Essentially you can enable or disable different aliases to swap the icons via PackageManager.ComponentEnabledSetting and this is likely the approach that Duolingo

uses. They probably run some background process that is hopefully scheduled at certain periods of time such as WorkerManager.

I am not too familiar with Android development so most of this is just speculation. It’s been many years since I last wrote an Android Application and it was quite primitive … I hated Android Studio … it killed my poor underperforming laptop

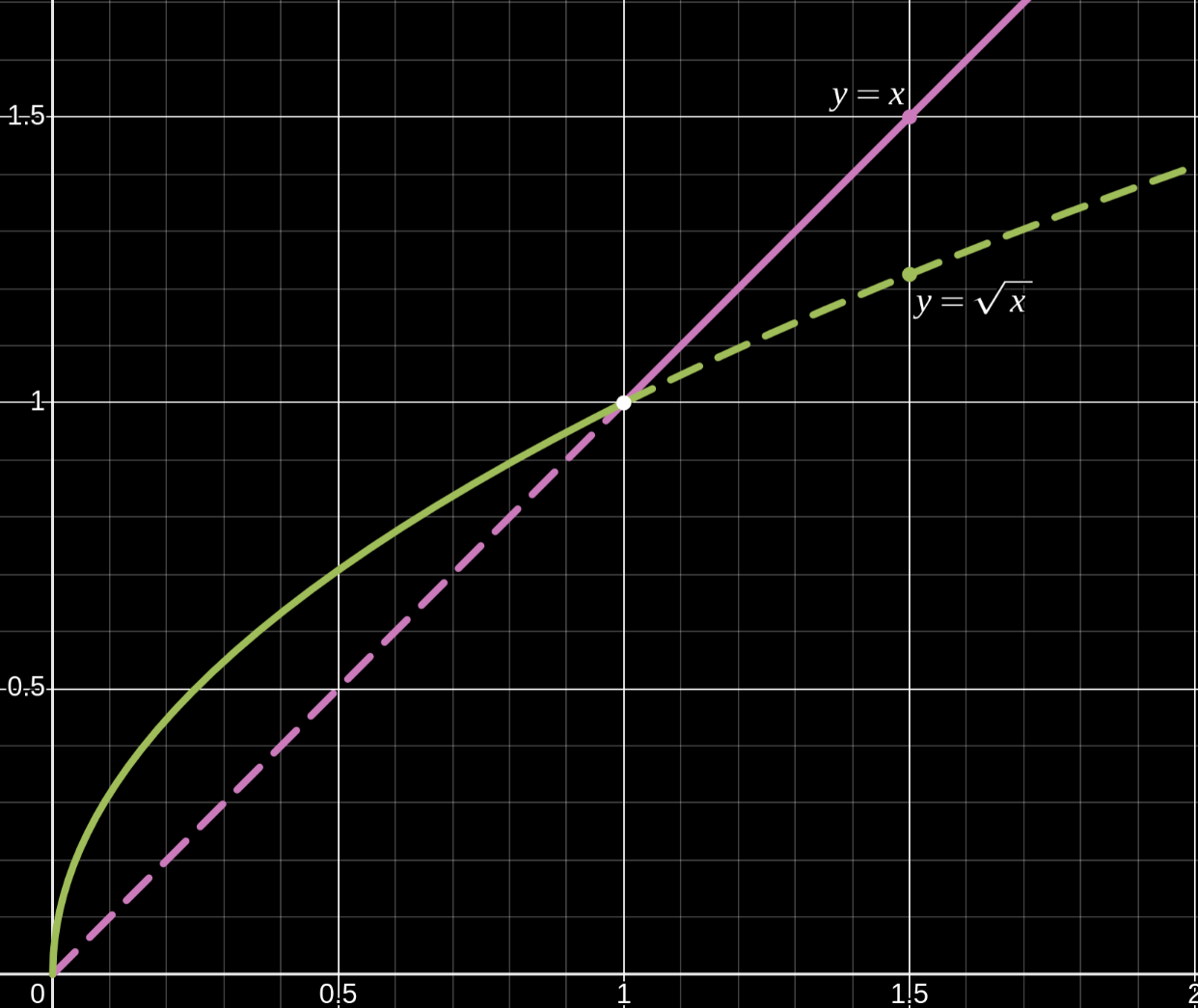

Behavior of Square Roots When x is between 0 and 1

April 14, 2025

Small numbers between 0-1 has always stumped me as it’s behaviors seemed unintuitive. For instance:

- $0.5^2 = 0.5 \cdot 0.5 = 0.25$

- $\sqrt{0.5} \approx 0.707106781$

which implies for $x \in (0,1)$:

- $x^2 \lt x$

- $\sqrt{x} \gt x$

I can rationalize in my head the first example by transforming the problem $x^2$ from an abstract equation into a more concrete word problem:

What is half of a half

Half is 50% and a half of 50% is 25%. While suffice enough to convince my brain, this lacks sufficient rigor in Mathematics. So let’s go through the proof a bit more formally as to why $x^2 \lt x$ because we’ll need this premise to explain why $x \lt \sqrt{x}$ for $x\in \{0,1\}$: